Those of us who create sound for videogames long for tools that make more efficient use of our time and resources. Videogames have been around for almost 30 years, but only recently have off-the-shelf software tools and technology become available to help game developers refine their production pipelines. In game audio development, producing assets has never been the problem; integrating those assets into a game has always been the challenge, especially across multiple platforms such as the Sony PlayStation 2, Microsoft Xbox and Nintendo GameCube.

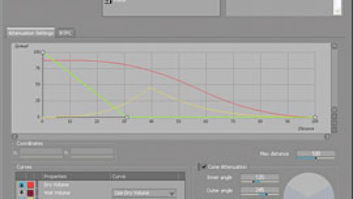

Editing the sound propagation of 3-D sound objects in the Position editor

For the most part, game developers have used their own proprietary tools or platform-specific ones such as SCREAM (Sony) or XACT (Microsoft). Unfortunately, when producing games for many platforms, some companies are left with no option but to literally hack audio into their games to meet deadlines. Add to this the programmer-dominated mindset of building tools only as needed, which often amounts to editing text files. Many developers lack the dedicated resources to build a comprehensive, multiplatform authoring tool designed with the sound designer in mind.

With the introduction of next-generation consoles such as the PlayStation 3, Xbox 360 and Wii, game audio development has become much more complex, in part because these platforms’ powerful hardware gives sound designers the resources to produce a rich, immersive interactive audio experience for players. Many great titles have used proprietary tools successfully, but developing games across many platforms with tools that give the sound designer full control over audio integration has, until now, been a major problem, given all the bottlenecks that keep audio development from finishing on schedule. Enter Wwise from Audiokinetic (www.audiokinetic.com).

Wwise was developed more than six years ago with the goal of making audio integration easier across multiple platforms (currently Windows, Xbox 360 and PlayStation 3). The Wwise philosophy holds that programmer involvement should be kept to an absolute minimum (although programmers are not completely off the hook).

The Wwise system comprises two main downloadable packages: a highly optimized sound engine in the form of an SDK (software development kit) and the Wwise authoring tool. Audiokinetic also offers a downloadable user’s guide and several video tutorials. I was immediately impressed with the documentation, which is thorough and professional. Although the nearly 600-page guide seemed daunting at first, it is well indexed and systematically organized, which makes learning relatively straightforward.

The nature of game audio production is such that programmers are still needed, albeit in a much reduced capacity. To use Wwise on a particular game project, a programmer must first “connect” the SDK to the game engine. The game engine is the core software component of the videogame: it keeps track of all actions in the game, handles graphics rendering, physics, collision detection and so on, and communicates with the sound engine. Once the connection is made, the game’s function calls can “talk” to the sound engine in real time and apply all necessary playback functions, audio processing, etc. At this time, Wwise requires a PC running Windows XP. Installing Wwise and its components was straightforward, with one extra step: installing the latest Microsoft .NET Framework. Mac users, fear not! I was able to run Wwise on a MacBook using Boot Camp.

Within Wwise, the sound designer/composer creates objects, such as sounds, that are triggered when certain game-data conditions are met. A triggered sonic event can involve several sounds with complex properties assigned to them. For example, I assembled a rifle shot from several parts: the initial plosive “bang,” the tail-out of the shot and a boom layer, played in sequence or as layers, all triggered as actions by a single event.

In addition, you can create a corresponding event so that when the game sends a function call to that event, an action occurs, such as playing a sound.
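To make the event idea concrete, here is a minimal Python sketch of a single event that fires several actions, modeled on the rifle-shot example. It is not the Wwise SDK, which is a C++ library with its own API; the class names, the event name `Play_RifleShot`, the sound names and the 120 ms delay are all hypothetical, chosen only to illustrate the concept.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Hypothetical toy model of events and actions; NOT the Wwise API.


@dataclass
class PlayAction:
    """One action inside an event: play a named sound object."""
    sound: str
    delay_ms: int = 0  # offset from the moment the event is posted


@dataclass
class SoundEngine:
    """Toy stand-in for a sound engine; it records what it would play."""
    events: Dict[str, List[PlayAction]] = field(default_factory=dict)
    log: List[str] = field(default_factory=list)

    def register_event(self, name: str, actions: List[PlayAction]) -> None:
        # Authored by the sound designer, not the programmer.
        self.events[name] = actions

    def post_event(self, name: str) -> None:
        # The game only sends the event name; every action attached to
        # that event is carried out, ordered by delay (stable sort keeps
        # layered actions with equal delays in authored order).
        for action in sorted(self.events[name], key=lambda a: a.delay_ms):
            self.log.append(f"{action.delay_ms:>4} ms: play {action.sound}")


engine = SoundEngine()
engine.register_event("Play_RifleShot", [
    PlayAction("rifle_bang"),                # initial plosive
    PlayAction("rifle_boom"),                # layered with the bang
    PlayAction("rifle_tail", delay_ms=120),  # sequenced after it
])
engine.post_event("Play_RifleShot")  # one call from the game
```

The point of the design is the division of labor: the game side only knows the event name, while the designer can add, remove or retime layers without the game code changing.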

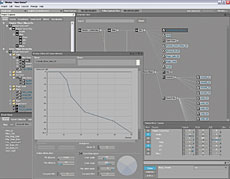

The Wwise application uses a hierarchical structure with two top levels: the Actor-Mixer and the Master-Mixer. Wwise uses “parent/child” relationships, so think of the Actor-Mixer and the Master-Mixer as the parents, each with its own parent/child relationships beneath it. Within the Actor-Mixer hierarchy, you have containers, sounds and additional Actor-Mixers. Containers are similar to folders but have properties and behaviors associated with them. Let’s say that I want to trigger the appropriate footsteps based on the type of surface my player is walking on. I would use a switch container that “switches” between a group of concrete footsteps and a group of grass footsteps. I would also create an event called “Play_Footsteps.” When the game detects that my character is walking on a concrete surface, it sends out a function call to trigger the “Play_Footsteps” event, which switches from the previous surface and plays the concrete group of sounds. There are other types of containers, each with its own particular behavior.
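The footstep example boils down to a lookup from the current surface to a group of sounds. The Python sketch below models that behavior under stated assumptions: the `SwitchContainer` class, the surface names and the sound file names are hypothetical, and picking a random variation within each group is an illustrative choice of mine, not a description of how Wwise itself is configured.

```python
import random
from typing import Dict, List, Optional


class SwitchContainer:
    """Hypothetical sketch of a switch container (not the Wwise API):
    one child group per switch value; playing the container plays from
    the group that matches the current switch state."""

    def __init__(self, groups: Dict[str, List[str]], default: str,
                 rng: Optional[random.Random] = None) -> None:
        self.groups = groups
        self.state = default
        self.rng = rng if rng is not None else random.Random()

    def set_switch(self, value: str) -> None:
        # The game reports the surface; unknown surfaces are an error.
        if value not in self.groups:
            raise KeyError(f"no group for switch value {value!r}")
        self.state = value

    def play(self) -> str:
        # Assumption: pick one variation at random from the active group
        # so repeated steps don't sound identical.
        return self.rng.choice(self.groups[self.state])


footsteps = SwitchContainer(
    groups={
        "Concrete": ["fs_concrete_01", "fs_concrete_02", "fs_concrete_03"],
        "Grass": ["fs_grass_01", "fs_grass_02"],
    },
    default="Grass",
    rng=random.Random(7),  # seeded only to make runs repeatable
)

# Game side: the character steps onto concrete, so the surface is
# switched and the hypothetical "Play_Footsteps" event plays a step.
footsteps.set_switch("Concrete")
step = footsteps.play()  # one of the fs_concrete_* variations
```

Keeping the surface-to-sound mapping inside the container means a new surface type is a data change for the sound designer rather than a code change for the programmer.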

The Master-Mixer hierarchy lets the designer create control buses that group together sounds, containers and Actor-Mixers, producing the final mix. Typical groups within the Master-Mixer would be dialog, sound effects, ambience and music. Events can also be triggered to apply sweeping level adjustments, such as voice ducking, and effects to a particular group or to all groups.
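A bus hierarchy like this is essentially a tree of gain stages. The Python sketch below is a simplified model, assuming levels in decibels that add up along the path to the master bus and a ducking event that attenuates every bus except dialog. The `Bus` class, the `set_voice_ducking` function and the -9 dB amount are hypothetical and do not reflect Wwise’s actual mixing engine.

```python
from dataclasses import dataclass
from typing import Dict, Optional

# Hypothetical toy model of a bus hierarchy; NOT Wwise's mixer.


@dataclass
class Bus:
    """A control bus in a toy Master-Mixer-style hierarchy."""
    name: str
    volume_db: float = 0.0          # level set by the sound designer
    duck_db: float = 0.0            # extra attenuation applied by events
    parent: Optional["Bus"] = None

    def effective_db(self) -> float:
        # Levels in decibels add up as the signal passes through each
        # bus on its way to the master bus.
        total, bus = 0.0, self
        while bus is not None:
            total += bus.volume_db + bus.duck_db
            bus = bus.parent
        return total


master = Bus("Master")
buses: Dict[str, Bus] = {
    name: Bus(name, parent=master)
    for name in ("Dialog", "SFX", "Ambience", "Music")
}


def set_voice_ducking(active: bool, amount_db: float = -9.0) -> None:
    # Hypothetical ducking event: attenuate every bus except Dialog
    # while a line of dialog plays, and restore it afterwards. The duck
    # offset is kept separate so the designer's own levels survive.
    for name, bus in buses.items():
        if name != "Dialog":
            bus.duck_db = amount_db if active else 0.0


master.volume_db = -3.0   # a change at the top affects every group
set_voice_ducking(True)   # music, SFX and ambience drop by 9 dB
```

Storing the ducking offset separately from the designer’s own bus level is one simple way to let an event make a sweeping, reversible change without overwriting the mix.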

The videogame industry has recently seen a lot of changes, including Microsoft’s announcement that it will release low-cost development packages for amateur game designers, with the goal of distributing their games on Xbox Live. More game development companies are also using off-the-shelf technologies and tools, such as Epic’s Unreal Engine 3 and id Software’s Doom and Quake engines. With Wwise, Audiokinetic has definitely hit a home run, making it arguably the best audio middleware package to date. The latest version, Wwise 2006.2.1, has many new features, such as workgroup functionality, environmental effects, multilistener support, voice priority management, a schematic view and batch editing. Wwise also provides tools for prototyping (the SoundCaster and the Game Simulator), for performance analysis (profiling) and for real-time editing.

It’s no wonder that Microsoft Game Studios (MGS) recently signed a long-term licensing agreement with Audiokinetic. Shadowrun, the highly anticipated title from FASA Studio, is the first game to use Wwise in a production environment. This is likely to become a trend, as other game development studios are sure to follow suit.

Michel Henein is a freelance sound designer, consultant and entrepreneur in Phoenix.