LOS ANGELES, CA—There are unique issues associated with creating or recreating realistic audio in the virtual world, challenges that are not easy even for established audio companies. One ambitious L.A.-based startup believes that it has a solution.

Channel-based surround sound formats have attempted to deliver truly immersive environments with ever-increasing numbers of speakers, from Tomlinson Holman’s 10.2 all the way up to NHK’s 22.2 configuration. But as Henney Oh, CEO and co-founder of Los Angeles-based spatial audio company G’Audio Lab, observes, “No matter how much you increase the channel count, the resulting sound is not truly and fully three-dimensional. Physically, it can be hard to place speakers in various locations around the listener, including above or below. Trying to project sound in between the speakers is also inherently imperfect.”

VR audio, on the other hand, is typically heard over headphones, he says. “This method of delivery has highlighted binaural rendering as a critical piece in VR audio, and a team that can provide high-quality binaural rendering will excel in the industry,” he predicts.

In binaural rendering for VR, three-dimensional sound is rendered down to a two-channel audio signal. Once the direction and distance of the sound source relative to the listener are determined, the Head-Related Transfer Function (HRTF) data for that position is applied, so the source sounds as though it is coming from that specific point in space. “All of this happens in real-time, making it a very difficult process filled with complex calculation,” says Oh.
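To make those steps concrete, here is a minimal Python sketch of the general technique, not G’Audio Lab’s renderer. It computes the source’s azimuth and distance relative to the listener, picks the nearest entry from a tiny, made-up table of head-related impulse responses (HRIRs, the time-domain form of HRTFs), applies a simple inverse-distance gain, and convolves the mono signal with the left- and right-ear responses. The table values, the counter-clockwise angle convention and the 1/r gain are all assumptions for illustration.

```python
import math

# Toy HRIR table (made-up values, NOT measured data). Keys are azimuths in
# degrees, counter-clockwise from straight ahead (+90 = listener's left);
# values are (left_ear_ir, right_ear_ir). Real HRIRs are much longer and also
# encode interaural time differences and elevation cues.
HRIR_TABLE = {
    0: ([0.7, 0.2, 0.05], [0.7, 0.2, 0.05]),
    90: ([1.0, 0.3, 0.1], [0.2, 0.1, 0.05]),
    180: ([0.5, 0.3, 0.1], [0.5, 0.3, 0.1]),
    -90: ([0.2, 0.1, 0.05], [1.0, 0.3, 0.1]),
}


def wrap_degrees(angle):
    """Wrap an angle to the range [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def source_direction(source_xy, listener_xy, listener_yaw_deg):
    """Return (azimuth_deg, distance) of a source relative to where the listener faces.

    World angles are counter-clockwise from the +x axis; listener_yaw_deg is the
    direction the listener faces in that same frame.
    """
    dx = source_xy[0] - listener_xy[0]
    dy = source_xy[1] - listener_xy[1]
    bearing = math.degrees(math.atan2(dy, dx))
    return wrap_degrees(bearing - listener_yaw_deg), math.hypot(dx, dy)


def nearest_hrir(azimuth_deg):
    """Pick the table entry closest to azimuth_deg (no interpolation between entries)."""
    key = min(HRIR_TABLE, key=lambda a: abs(wrap_degrees(azimuth_deg - a)))
    return HRIR_TABLE[key]


def convolve(signal, ir):
    """Direct-form linear convolution; output length is len(signal) + len(ir) - 1."""
    out = [0.0] * (len(signal) + len(ir) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(ir):
            out[i + j] += s * h
    return out


def render_binaural(mono, source_xy, listener_xy, listener_yaw_deg):
    """Render a mono source to a (left, right) pair using the nearest HRIR."""
    azimuth, distance = source_direction(source_xy, listener_xy, listener_yaw_deg)
    gain = 1.0 / max(distance, 1.0)  # inverse-distance attenuation, clamped at 1 unit
    left_ir, right_ir = nearest_hrir(azimuth)
    scaled = [gain * s for s in mono]
    return convolve(scaled, left_ir), convolve(scaled, right_ir)


# Listener at the origin facing +x; an impulse 2 units to the listener's right.
left, right = render_binaural([1.0], (0.0, -2.0), (0.0, 0.0), 0.0)
print(left, right)
```

A real-time renderer would replace the nearest-neighbour lookup with interpolation between measured directions and the direct convolution with FFT-based (typically partitioned) convolution, which is where much of the computational cost Oh mentions is spent.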

The core members of G’Audio Lab initially became known for developing binaural rendering algorithms that minimize computational load while retaining sound quality. Once those algorithms were adopted as part of the MPEG-H 3D Audio international standard, it was clear that the technology could be used in any situation where listening requires headphones. As virtual reality has captured the attention of consumers and developers alike in recent years, it has emerged as an obvious candidate.

As VR has become more popular, Ambisonics’ B-format has emerged as a popular audio format for many content developers, in large part due to the relative ease of capture with available 360 microphones and the believable ambiance it provides as a snapshot of spherical sound. Major players like YouTube and Facebook support Ambisonics because it represents 360 environments reasonably well.

“It’s not perfect, however,” cautions Oh, “since Ambisonics portrays a blurred sound with some directionality as opposed to pinpointed sound sources. This is why representing mono signals with individual sound objects to achieve precise localization is becoming the favored approach. A mono signal is like a raw sound that any filter or effect can easily be added to, while an Ambisonics signal is a whole ambient sound that can become unnatural with any additional mixing and mastering.”
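One way to see the “blurred” directionality Oh describes is to decode a first-order B-format signal with a virtual cardioid microphone. The sketch below encodes a single source straight ahead using the traditional FuMa first-order equations (W weighted by 1/√2), then measures the virtual microphone’s output as it is turned away from the source. For a single source the response works out to 0.5(1 + cos γ), where γ is the angle between the source and the look direction, so the source is still at half amplitude 90° off-axis. This only illustrates first order; higher-order Ambisonics and more sophisticated decoders sharpen the image considerably. The function names are hypothetical, and the channel order and normalization a given platform expects should be checked against its own specification.

```python
import math


def encode_fuma(sample, azimuth_deg, elevation_deg=0.0):
    """Encode a mono sample as first-order B-format (W, X, Y, Z) with FuMa weighting.

    Azimuth is counter-clockwise from straight ahead; elevation is positive upward.
    """
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    return (
        sample / math.sqrt(2.0),               # W: omnidirectional
        sample * math.cos(az) * math.cos(el),  # X: front/back figure-of-eight
        sample * math.sin(az) * math.cos(el),  # Y: left/right figure-of-eight
        sample * math.sin(el),                 # Z: up/down figure-of-eight
    )


def virtual_cardioid(bformat, azimuth_deg, elevation_deg=0.0):
    """Point a first-order virtual cardioid microphone into a FuMa B-format frame."""
    w, x, y, z = bformat
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    figure8 = (x * math.cos(az) * math.cos(el)
               + y * math.sin(az) * math.cos(el)
               + z * math.sin(el))
    # Half omni (W rescaled by sqrt(2) to undo FuMa weighting) plus half figure-of-eight.
    return 0.5 * (math.sqrt(2.0) * w + figure8)


# A single source straight ahead, "listened to" from several look directions.
front_source = encode_fuma(1.0, azimuth_deg=0.0)
for look in (0, 45, 90, 135, 180):
    print(f"{look:>3} deg: {virtual_cardioid(front_source, look):.3f}")
```

An object-based mono source, by contrast, keeps its exact position as metadata until the final binaural render, which is why objects can be localized more precisely.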

A combination of objects and Ambisonics signals most accurately mimics nature, where a multitude of specific sound objects is paired with an overall ambient sound to create the entirety of an auditory scene, says Oh. G’Audio Lab’s authoring tool supports both object and Ambisonics signals as inputs. “A beach scene that is set up in VR using this authoring tool might have individual object sounds for people and seagulls, while Ambisonics provides the general audio for waves,” he offers as an example.

But Ambisonics is not yet completely ubiquitous, he adds: “Dolby, for example, does not currently support Ambisonics signals as inputs. Those same waves would have to be mono or channel signals, which would be perceived as an isolated point of sound by the listener.”

Audio artists working in VR have been frustrated by the lack of a uniform format, the lack of standardized player platforms, the diversity of end-user gadgets and a paucity of end-to-end workflows. “Everything outside of the actual creative process is incredibly frustrating,” says Oh. “The least they can be met with are intuitive tools and fantastic output quality while they make artistic tweaks. These two simple requirements—ease of use and quality output—are not easily satisfied, though.”

In VR, even if users aren’t exercising their ability to walk around, they can look in any direction. The most basic task in VR audio is therefore accurately matching sound to visuals that change dynamically in real time.
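Keeping sound locked to the picture while the viewer looks around usually means counter-rotating the audio scene by the head tracker’s orientation before binaural rendering. The sketch below shows this for yaw only, for both an object (a simple azimuth offset) and a first-order B-format frame (a 2-D rotation of the X and Y channels). It assumes counter-clockwise-positive angles and FuMa-style channel names; pitch and roll, and the per-block smoothing a real-time renderer would need, are omitted. The function names are hypothetical, and this is a general technique rather than a description of any particular product.

```python
import math


def wrap_degrees(angle):
    """Wrap an angle to the range [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def object_azimuth_in_head(world_azimuth_deg, head_yaw_deg):
    """Head-relative azimuth of an object for a given head yaw (both counter-clockwise positive)."""
    return wrap_degrees(world_azimuth_deg - head_yaw_deg)


def rotate_bformat_yaw(w, x, y, z, head_yaw_deg):
    """Counter-rotate one first-order B-format frame so the scene stays fixed to the picture.

    A head turn of +psi (to the left) moves every source by -psi relative to the head:
    X' = X cos(psi) + Y sin(psi), Y' = Y cos(psi) - X sin(psi). W and Z are unchanged by yaw.
    """
    psi = math.radians(head_yaw_deg)
    x_rot = x * math.cos(psi) + y * math.sin(psi)
    y_rot = y * math.cos(psi) - x * math.sin(psi)
    return w, x_rot, y_rot, z


# A source 90 degrees to the left in the scene; the viewer turns left to face it,
# so after compensation it should sit straight ahead in both representations.
print(object_azimuth_in_head(90.0, 90.0))
print(rotate_bformat_yaw(1 / math.sqrt(2.0), 0.0, 1.0, 0.0, 90.0))
```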

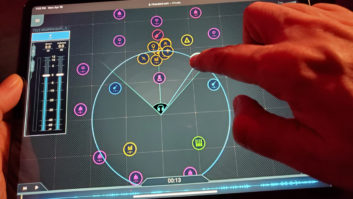

But until very recently, commercially available digital audio workstations largely did not support synchronized 360 video, let alone the ability to visualize the position of sounds relative to the picture. “G’Audio provides a Pro Tools plugin that audio engineers can use to assign positional data to sound sources by intuitively recording source movement on a video view,” reports Oh, referring to the company’s Works product.
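G’Audio Lab has not described how Works stores the positions an engineer records, so the following is only a generic sketch of the underlying idea. On an equirectangular 360 frame, horizontal pixel position maps linearly to azimuth and vertical position to elevation, and a recorded pointer path becomes a list of time-stamped direction keyframes that can be interpolated during playback. The `Keyframe` type, the function names and the conventions (frame centre = straight ahead, counter-clockwise-positive azimuth, top row = straight up) are assumptions.

```python
from dataclasses import dataclass


@dataclass
class Keyframe:
    time_s: float
    azimuth_deg: float
    elevation_deg: float


def pixel_to_direction(u, v, width, height):
    """Map a point on an equirectangular frame to (azimuth, elevation) in degrees.

    Assumes the horizontal centre of the frame is straight ahead, points left of
    centre have positive azimuth, and the top row is straight up.
    """
    azimuth = 180.0 - 360.0 * u / width
    elevation = 90.0 - 180.0 * v / height
    return azimuth, elevation


def record_path(samples, width, height):
    """Turn (time_s, u, v) pointer samples captured over the video into keyframes."""
    return [Keyframe(t, *pixel_to_direction(u, v, width, height)) for t, u, v in samples]


def direction_at(keyframes, time_s):
    """Linearly interpolate direction between keyframes, taking the short way round in azimuth."""
    if time_s <= keyframes[0].time_s:
        return keyframes[0].azimuth_deg, keyframes[0].elevation_deg
    for a, b in zip(keyframes, keyframes[1:]):
        if a.time_s <= time_s <= b.time_s:
            span = b.time_s - a.time_s
            frac = 0.0 if span == 0 else (time_s - a.time_s) / span
            d_az = (b.azimuth_deg - a.azimuth_deg + 180.0) % 360.0 - 180.0
            az = (a.azimuth_deg + frac * d_az + 180.0) % 360.0 - 180.0
            el = a.elevation_deg + frac * (b.elevation_deg - a.elevation_deg)
            return az, el
    last = keyframes[-1]
    return last.azimuth_deg, last.elevation_deg


# A 3840x1920 frame: the pointer moves from the centre to three-quarters across in 2 s.
path = record_path([(0.0, 1920, 960), (2.0, 2880, 960)], width=3840, height=1920)
print(direction_at(path, 1.0))
```

Interpolating azimuth along the shorter arc keeps a source that crosses behind the viewer (from +170° to -170°, say) from sweeping the long way around through the front.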

“From a logical perspective, it seems like this should be standard practice across the industry,” he comments, yet there are major industry players that do not offer such a video feature. “They only provide a view of the sounds in a basic computer model of 3D space. Audio engineers are then forced to imagine how the audio will sound when it’s played with the video, which creates a significant barrier to creating quality content,” he says.

“When compared to the solutions available for creating 360 video, tools for producing truly immersive sound still have some catching up to do. However, there’s been an overarching shift in the industry to focus on audio, and I’m confident we’ll see huge strides made in the months to come.”

G’Audio Lab

gaudiolab.com