I listen to conventional, terrestrial FM radio—in the bedroom, bathroom, kitchen and car—on average about three hours a day. Making radio sound as good as possible on such a wide variety of systems is both a technical and a political challenge, requiring a range of expertise and diplomacy second only to tax-code reform or audio mastering.

A broadcast engineer’s priorities range from the continual challenges of live programming to creating consistency between sound sources from around the world to balancing seemingly mutually exclusive management demands, such as ensuring that the station has a unique sonic personality and is as loud as the competition. Similar to the volume wars faced by mastering engineers, radio engineers have seen their levels pushed beyond the limit of “nice.” A decent playback system reveals commercial radio’s brute-force solutions that (with the exception of compensating for automotive background noise and worst-case junk radios) shouldn’t be necessary on modern sound systems.

Although the sound of commercial radio is offensively consistent, the bottom line is that I can listen to public radio for longer periods without fatigue. That said, public radio’s more neutral, less-aggressive approach reveals inconsistencies; the voice-to-voice disparity can be frustrating at times, especially with news programming. The difference between broadcast and music mixing is that a recording engineer would try to reconcile the disparities between host and guests, or between local and network inserts. Radio operators have their hands full: the priority of staying live without missing a cue (and causing dead air) is all too great.

Wake, Wash and Drive

While at home, I listen mostly to news/talk on bass-challenged clock radios, which, while mediocre at best, provide the low-frequency filtering that should be part of the host mic’s signal chain. My car has respectable bass response that can make music a pleasurable experience, until the DJ/host comes on—muddier than two kids playing in the spring thaw. I’d rather not sacrifice bass notes for host clarity—that should be done at the station—and I’d also rather not have to grab tone controls while driving. For music, I usually listen with the loudness on and the tone controls set to flat. (For commercial music programming, I’ll reduce the treble as much as 4 dB while adding a similar amount of bass.)

My three-hour daily airwave diet has prompted me to generate a punch list of potential sonic improvements for all broadcasters, the “research” for which is detailed at mixonline. In a nutshell, the issue addressed is the spectral disparity between talk and music. Hosts should not have more low-frequency energy than a bass guitar or kick drum.

Unnecessarily Up-Close and Personal

Everyone loves to work a mic’s proximity effect for warmth, but this also generates excessive low-frequency energy that confuses dynamics processors into pushing the level down, reducing intelligibility in the process. These five basic challenges explain why compressors “see” bass better than we hear it.

1. Human hearing is bass-deficient.

2. Extended LF reproduction requires large woofers.

3. Acoustic spaces that support LF response are the exception.

4. Monitor positioning is optimized for convenience first and rarely for best response.

5. Powered monitors should be able to compensate for the previous four challenges, not just by achieving “flat” response but also by offering a Loudness button or, better yet, loudness compensation integrated into a monitor-level controller. (I realize this suggestion is blasphemous and well outside the box.)
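To put a rough number on the first challenge, here is a minimal sketch that compares what a flat (unweighted) level detector reports with an A-weighted estimate of the same tone. The A-weighting curve is my stand-in for hearing sensitivity at moderate levels; it is not something the broadcasters quoted here use, and the -10 dB test level is purely hypothetical.

```python
import math


def a_weighting_db(freq_hz: float) -> float:
    """A-weighting gain in dB (standard analog formula, about 0 dB at 1 kHz)."""
    f2 = freq_hz ** 2
    numerator = (12194.0 ** 2) * f2 ** 2
    denominator = (
        (f2 + 20.6 ** 2)
        * math.sqrt((f2 + 107.7 ** 2) * (f2 + 737.9 ** 2))
        * (f2 + 12194.0 ** 2)
    )
    return 20.0 * math.log10(numerator / denominator) + 2.00


def flat_detector_db(level_db: float) -> float:
    """An unweighted detector reports the same level at every frequency."""
    return level_db


if __name__ == "__main__":
    tone_level_db = -10.0  # hypothetical level shared by every test tone
    for freq in (50, 100, 200, 1000, 3000):
        seen = flat_detector_db(tone_level_db)
        heard = tone_level_db + a_weighting_db(freq)
        print(f"{freq:>5} Hz  detector: {seen:6.1f} dB  A-weighted: {heard:6.1f} dB")
```

The detector column never changes, while the A-weighted column drops steeply below a few hundred hertz. That gap is the energy a compressor reacts to but a listener barely registers.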

Broadcast engineers should encourage hosts to increase their vocal-miking distance to at least 12 inches. A highpass filter should be applied to remove subsonics below 80 Hz, followed by a shelving equalizer to reduce LF energy below 160 Hz. The host mic should be gently compressed so it’s competitive with heavily processed music (Auto-Tuning being our industry’s most egregious faux pas). If possible, try inserting EQ (a broad mid-boost centered between 2.5 and 3 kHz) into the vocal compressor sidechain.
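As a starting point for experimenting, the highpass and shelf stages can be sketched as two biquad filters using the widely published Audio EQ Cookbook formulas. The 80 Hz and 160 Hz corner frequencies come from the text; the 48 kHz sample rate, the Butterworth Q and the -6 dB shelf depth are my assumptions, and the compressor and sidechain EQ are left out.

```python
import cmath
import math

SAMPLE_RATE = 48000.0  # assumed sample rate, not specified in the text


def highpass(f0, q=1 / math.sqrt(2)):
    """Second-order highpass coefficients (b, a); default Q is Butterworth."""
    w0 = 2 * math.pi * f0 / SAMPLE_RATE
    cos_w0 = math.cos(w0)
    alpha = math.sin(w0) / (2 * q)
    b = [(1 + cos_w0) / 2, -(1 + cos_w0), (1 + cos_w0) / 2]
    a = [1 + alpha, -2 * cos_w0, 1 - alpha]
    return b, a


def low_shelf(f0, gain_db, slope=1.0):
    """Second-order low-shelf coefficients (b, a); negative gain_db cuts."""
    amp = 10 ** (gain_db / 40)
    w0 = 2 * math.pi * f0 / SAMPLE_RATE
    cos_w0 = math.cos(w0)
    alpha = math.sin(w0) / 2 * math.sqrt((amp + 1 / amp) * (1 / slope - 1) + 2)
    k = 2 * math.sqrt(amp) * alpha
    b = [
        amp * ((amp + 1) - (amp - 1) * cos_w0 + k),
        2 * amp * ((amp - 1) - (amp + 1) * cos_w0),
        amp * ((amp + 1) - (amp - 1) * cos_w0 - k),
    ]
    a = [
        (amp + 1) + (amp - 1) * cos_w0 + k,
        -2 * ((amp - 1) + (amp + 1) * cos_w0),
        (amp + 1) + (amp - 1) * cos_w0 - k,
    ]
    return b, a


def gain_db(coeffs, freq_hz):
    """Magnitude response of one biquad at freq_hz, in dB."""
    b, a = coeffs
    z1 = cmath.exp(-1j * 2 * math.pi * freq_hz / SAMPLE_RATE)  # z^-1
    num = b[0] + b[1] * z1 + b[2] * z1 * z1
    den = a[0] + a[1] * z1 + a[2] * z1 * z1
    return 20 * math.log10(abs(num / den))


def chain_gain_db(freq_hz):
    """Combined response: 80 Hz highpass followed by a 160 Hz low shelf."""
    shelf_cut_db = -6.0  # hypothetical shelf depth; adjust to taste
    return gain_db(highpass(80.0), freq_hz) + gain_db(
        low_shelf(160.0, shelf_cut_db), freq_hz
    )


if __name__ == "__main__":
    for freq in (40, 80, 160, 320, 1000, 3000):
        print(f"{freq:>5} Hz: {chain_gain_db(freq):6.1f} dB")
```

Printing the combined response at a handful of frequencies is a quick way to check that the chain leaves the speech range alone while pulling down the region where proximity effect piles up.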

So How Does Radio Do It?

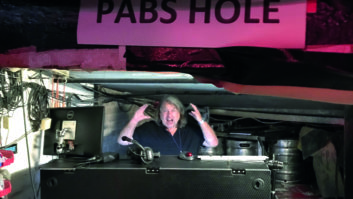

I approached two public radio broadcast engineers with questions to see what life is like on their turf. Both engineers were ready and willing to provide perspective.

Peter Bombar, technical production supervisor at WUNC (North Carolina), says that what technicians might view as obvious proximity effect, a host might hear as “warm,” which projects to their listeners as “appealing,” much as a recording artist needs to be comfortable with their sound. The mic on Dick Gordon (host of The Story) is a Neumann U87. Frank Stasio (host of The State of Things) favors an Electro-Voice RE-20, as do Joe and Terry Graedon (hosts of The People’s Pharmacy). The signal path for the U87 runs through an analog Wheatstone console with no EQ or compression, directly into Adobe Audition, and is monitored on Genelec 1030A reference studio monitors.

For a program like The Story, the majority of the interviews are through an ISDN system from remote studios that vary from “very good” to “very not so good.” Bombar explains that his hardworking staff must meet daily deadlines, focusing on achieving a meaningful and purposeful interview more than on allowing time for “mastering” or equalizing various source material.

Kyle Wesloh is manager of production and operations at Minnesota Public Radio (MPR)/American Public Media (APM), where the host mics are the Electro-Voice RE-20, a Shure KSM-32 (with “popless VAC” windscreens) and a vintage Neumann U87 (for national shows). Regional host mic settings (with or without highpass filter) are flat (no EQ, no compression). National programs use Great River preamps and EQ, and Crane Song compression. Settings are unique to each host. All of their shows use the Axia Element modular console. Both engineers must maintain consistent operating levels as set by APM, NPR (National Public Radio) and PRSS (Public Radio Satellite System), some of which can only be truly achieved in post-production.

Going Off-Air

It can be argued that radio is not for golden-eared audiophiles, but I do believe it’s possible to raise a station’s low-frequency energy awareness simply by having at least one full-range monitoring system in house. Studio speakers with 6-inch woofers are amazing for their size but are down 6 dB (or worse) at 47 Hz, when at minimum they need to be up by that much.
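For a sense of scale, the arithmetic behind that claim can be worked through in a few lines. This is only a sketch: it takes the 6 dB shortfall and the matching 6 dB target from the paragraph above and converts the resulting gap into linear ratios using the standard decibel definitions.

```python
def db_to_amplitude_ratio(db: float) -> float:
    """Convert decibels to a voltage or sound-pressure ratio."""
    return 10 ** (db / 20)


def db_to_power_ratio(db: float) -> float:
    """Convert decibels to a power ratio."""
    return 10 ** (db / 10)


shortfall_db = 6.0     # from the text: small monitors are down 6 dB at 47 Hz
target_boost_db = 6.0  # from the text: they "need to be up by that much"
gap_db = shortfall_db + target_boost_db

if __name__ == "__main__":
    print(f"gap: {gap_db:.0f} dB")
    print(f"amplitude ratio: {db_to_amplitude_ratio(gap_db):.2f}x")
    print(f"power ratio: {db_to_power_ratio(gap_db):.1f}x")
```

A 12 dB gap is roughly a fourfold difference in amplitude, which is why a small monitor can hide low-frequency problems that are obvious on a full-range system.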

These suggestions are intended as a starting point (salt and pepper to taste). For those concerned about maintaining warmth, the primary point is that target audiences listening on a minivan stereo or clock radio may not—or should not—hear the EQ changes. If the engineer makes corrections and/or creates presets to tackle the more egregious daily “problems,” the other processors in the signal chain can do a better job—with less gain reduction—while improving intelligibility and level consistency, all without sledgehammer signal processing. Once the host mic’s excessive low-frequency energy is tamed, the multiband processor can be re-adjusted to restore warmth (but not rumble or mud) to the overall program. Who knows, it might even improve ratings!
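The "less gain reduction" argument can be illustrated with a toy static compressor. Everything numeric here is hypothetical (the voice and low-frequency levels, the 9 dB cut, the threshold and the ratio), and the detector simply power-sums the two components as if they were uncorrelated. It is a sketch of the reasoning, not a model of any station's processor.

```python
import math


def power_sum_db(levels_db):
    """Combined level of uncorrelated components, each given in dB."""
    return 10 * math.log10(sum(10 ** (level / 10) for level in levels_db))


def gain_reduction_db(level_db, threshold_db, ratio):
    """Static gain reduction of a hard-knee compressor, in dB."""
    over = max(0.0, level_db - threshold_db)
    return over * (1 - 1 / ratio)


# Hypothetical numbers chosen only for illustration.
VOICE_DB = -12.0      # speech-band energy from the host mic
LF_DB = -8.0          # proximity-effect energy below the speech band
LF_CUT_DB = 9.0       # attenuation applied by a highpass/shelf stage
THRESHOLD_DB = -18.0
RATIO = 3.0

before = gain_reduction_db(power_sum_db([VOICE_DB, LF_DB]), THRESHOLD_DB, RATIO)
after = gain_reduction_db(
    power_sum_db([VOICE_DB, LF_DB - LF_CUT_DB]), THRESHOLD_DB, RATIO
)

if __name__ == "__main__":
    print(f"gain reduction before LF cut: {before:.1f} dB")
    print(f"gain reduction after LF cut:  {after:.1f} dB")
```

With the low-frequency component trimmed, the detector sees a lower combined level and pulls the voice down less, even though the speech-band energy has not changed.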

Eddie Ciletti is the pirate radio captain of his neighborhood “ship,” as well as at www.tangible-technology.com.