Editor’s Note: Welcome to Need to Know, a monthly feature where we explain complex topics and demonstrate how they apply to each industry we serve. Need to Know stories appear on our websites and in our magazines.

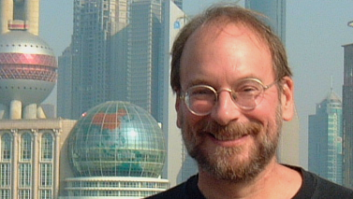

Any discussion of early work in artificial intelligence, not just in pro audio but in general, must include music and technology pioneer Ray Kurzweil. His accomplishments range from the invention of the first omni-font optical character recognition devices to the first text-to-speech synthesizer to the K250, the first commercial synth to emulate the sounds of a grand piano and other orchestral instruments to an acceptable performance standard. He has written extensively on machine learning, including 1999’s worldwide bestseller, The Age of Spiritual Machines.

In 1963, while still a teenager, Kurzweil wrote his first computer program, which analyzed the works of classical composers and then created its own songs in similar styles. In 1965, he famously appeared on CBS, where he performed a piano piece composed by a computer he had also built. Later that year, he won first prize in the International Science Fair for the invention. His work set off a flood of efforts in AI-based composition that continues to this day in web-based apps that create original music for a project based simply on length, genre, tone and style.

Composition became the focus of AI in music and audio over the ensuing 50 years, ranging from developments like Orb and MAX in the early 1980s to MorpheuS and even recent developments with the Tab Editor for the Reaper DAW. In the past couple of years, companies like Amper Music have emerged to offer free AI-based composition services to web developers and media pros, and research projects like Flow Machines, funded by the European Research Council, have set out to produce the perfect pop album, all based on machine learning.

Concurrently in pro audio, efforts have been made to employ AI to speed up the production process and make it more efficient. Early room tuning exercises, where monitors incorporated feedback circuits to electronically correct for room anomalies, can be considered a primitive form of AI, as can systems like Smaart and SIMM for live-sound room correction.

More recently, pro audio software developers have started to include “artificial intelligence” in their marketing materials. Soundtheory has released Gullfoss, a plug-in dubbed an “intelligent automatic equalizer” that can, essentially, handle EQ decisions based on the music and your taste. Zynaptiq has released Intensity, which the company says is built on facial recognition algorithms to bring out a sound’s inherent detail and allow fine-tuning from a single knob.

While still in its most primitive forms, AI is coming to pro audio. There are shades of the advent of MIDI in that there have been predictions of composers being put out of work, and the emergence of LANDR, an “AI-based, automated mastering service,” must strike some fear in mastering engineers.

But for now, in an industry still coming to grips with how to properly create a true 3D sound experience, the promise of AI is exciting while the threats to jobs and creative contributions are minimal. Pay attention, though: AI is coming.

Need to Know More?

Do you have a burning question about AI? Or maybe there’s a particular topic you’d like to see us tackle in future installments of Need to Know. Email us at [email protected] and we’ll put our top minds on it!

Other Industries

• AI and Pro Audio [ProSoundNetwork]

• AI and CE Retail [TWICE]

• AI and Residential Integration [Residential Systems]

• AI and Television Technology [TV Technology]

• AI and K-12 Education [Tech & Learning]