All photos Lucasfilm Ltd./All Rights Reserved. Used by Permission.

Never before — or since — has there been such a concentrated group of influential filmmakers as there was in San Francisco during the late 1960s. The founding of Francis Coppola’s Zoetrope Studios saw the beginning of the careers of director George Lucas and sound designer/editor Walter Murch, among many others. Starting from scratch in 1969, the Bay Area film community would — in less than two decades — become the virtual center of the film sound world.

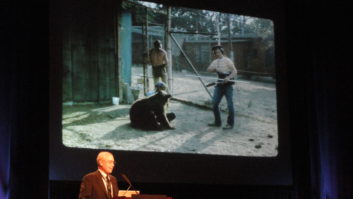

Lucas himself, of course, would go on to direct American Graffiti and Star Wars, as well as to create and produce the Indiana Jones features for his friend Steven Spielberg to direct. He is now finishing the last episode of his six-part Star Wars saga, scheduled to open next May. Just weeks before his induction into the TEC Hall of Fame at AES San Francisco, Mix spoke to Lucas about his deep and genuine passion for all aspects of the cinema experience: audio, picture, presentation and preservation.

I just saw THX 1138 at the Arclight in Hollywood in digital projection and it looked amazing.

This is a good example of why we’re really fighting hard for digital projection. We showed THX in its new digital restoration. The fact that it’s digital means that it will look and sound the same in five years as it does today. They were also doing a Marlon Brando retrospective at the festival and they showed The Godfather with one of the best prints they could come up with. It was horrible, just embarrassing — all scratched and dirty. You see one film that’s 35 years old and it looks beautiful. Another film that’s a few years younger, and was also restored recently, but in a totally photochemical process. A lot of people don’t understand that a week after you put a movie in the theaters [on a film print], it’s starting to disintegrate.

You moved to the Bay Area in 1968 when Zoetrope was founded. In your wildest dreams, could you have imagined what would be happening years later, not only with Zoetrope but also with Lucasfilm and companies like Dolby Labs that are based in the Bay Area?

Well, not really. Obviously when we came up here, Walter [Murch] and I were right out of film school, and Francis [Coppola] was in the middle of shooting his third movie [The Rain People]. He was not your hot filmmaker at that point. So we just said, “Look. We’re going to establish a facility and a little world up here that is based on the love of movies, not necessarily on the corporate culture that was taking over Los Angeles.”

We realized very quickly that you don’t need to build a studio [with shooting soundstages]. What we needed to do was focus on post-production because that’s what takes a long time. And since I started out as an editor, I was extremely interested in post-production. We assumed we would shoot our films in the street or we’d go on location. If we needed stages, we’d go rent a warehouse or a studio. But instead of investing in those facilities, that money would go to the highest-quality finish on the films. That’s where you really make or break a movie — I feel that sound is half the experience. Filmmakers should focus on making sure the soundtracks are really the best they can possibly be because in terms of an investment, sound is where you get the most bang for your buck. Starting in film school, Walter and I were very focused on sound and very interested in its power. So that’s really where the centerpoint of Zoetrope came from. The first real investment at Zoetrope was in mixing equipment.

And on those early films like THX and American Graffiti, your involvement was very hands-on in post-production sound. Didn’t you actually do the worldizing [Murch’s concept of recording sounds in real-world environments] on Graffiti together with Walter?

Yeah. In those days, really it was just the two of us and a couple of assistants. The whole post-production staff was about four or five people. And that was everything — sound editing, mixing, the whole thing. We did all of it. I sat on the board — I was Walter’s third hand — and we did it ourselves. That was the way we used to do things in those days, although I think it’s still true of low-budget filmmaking today.

Years ahead of its time, the Lucasfilm/Droid Works EditDroid system offered fast, laserdisc-based picture editing in 1985.

In the days when punch-in recording and high-speed rewind were on the leading edge, you went from being a user to becoming involved in the development of high technology, such as the EditDroid and SoundDroid systems in the early ’80s. Could you talk about that transition?

The first thing you learn in film school is that editing sound is pretty cumbersome. The picture medium was developed in the 19th century and sound in the early 20th century. At that point [in the ’70s and early ’80s], it hadn’t changed much. At USC, we had magnetic recording [which was developed in the ’50s], but there was a controversy about which was the better way to cut sound: mag or optical. We had people there who were talking about the advantages of cutting optical tracks! I grew up under the tutelage of Verna Fields at USC, who was a sound editor [and later picture editor of American Graffiti and Jaws], and Kay Rose who was a dialog editor. So I came out of a world where understanding the history of how film was developed was very important.

I used a Moviola when I was doing student films and 16mm films in my career as an editor, but at Zoetrope with Francis, we moved into using Steenbecks and Kems and the European [flatbed] methodology, which was far more sophisticated. But it still lacked a lot of the flexibility and malleability that an editor wants, especially because half of editing is archiving and retrieving material, which really falls on the assistant in terms of finding what box a shot is in. It’s a practical, physical reality that you have to deal with. And living with a lot of frustration, I started saying, “I think we can do this better.”

I started putting together a computer division right after Star Wars and as one of the centerpieces of that division, I wanted to build a new [picture] editing system that was nonlinear and disk-based. It was not done like the CMX and other systems that were out at that time, which were simply designed around the offline/online [tape-based] television post process. I wanted to build something that actually included and focused on the art of editing as I learned it in school. When we did the SoundDroid, one of the things we included was the ability to see the striations [waveforms] of everything just like an optical track so that we could use some of the advantages that optical cutting had. You can see where the words are and see where the sounds are and cut accordingly, which a lot of the older editors really thought was great.

How about the transition to actually manufacturing EditDroid and SoundDroid?

When we were about to go to the next level, I realized I didn’t want to be in the hardware business and run a company that built machines and things. We decided to sell EditDroid to Avid and have its ideas incorporated into the Media Composer [picture editing system]. I was very focused on wanting to have an integrated sound system so that the sound editing and the picture editing could be integrated. Unfortunately, I’m still fighting that fight. [Laughs.]

It’s somewhat ironic that even after Avid bought Digidesign, the two platforms — Film Composer and Pro Tools — are still completely different pieces of software and link only peripherally via OMF.

That’s my last real battle, so that when you’re cutting picture, someone can be cutting sound simultaneously and the two systems are compatible, meaning you don’t have to learn two different systems. I have always hired the sound editor the same day I hired the picture editor. I’ve always had the sound editor working alongside the picture editor so that when we’re looking at cuts, we’re looking at things with [proper] sound, so we don’t look at it in isolation. We can incorporate sound editing into the picture editing work and actually look at the cuts with those ideas intact so we can say, “That works.” So when you get down to the end and you have a fine cut of the picture, you usually have your soundtrack cut. You still have to do some of the ADR and Foley and fix some things, but basically, it’s finished. What you see is pretty much the way the movie is going to be rather than, “This will all be great once we get it into the mix.”

George Lucas and composer John Williams relax between takes during the orchestral recording of Star Wars Episode I at London’s Abbey Road studios.

Let’s talk about Episode III and the use of Pro Tools in the mix. You have recently expanded the Tech Building at the Ranch adding nine sound design/premixing suites. Can you talk about that and how you envision virtual mixing with Pro Tools and following that through to the final mix of the movie?

The Tech Building was built 20 years ago now and was designed to be a digital post-production building. Unfortunately, it was way ahead of its time, so what we had intended to be computer rooms were used as mag transfer rooms and machine rooms. There were a lot of things going on that the building wasn’t really designed for; we had to accommodate what the rest of the industry was doing. But over the last few years, I’ve been able to push them and with the new remodel, we’ve taken the focus off premixes. We’ll do the premixes on Pro Tools in sound design rooms because they are of equal quality. It’s way beyond just a digital board. There is no such thing as making commitments, which is a huge advantage, especially for a director, because now you don’t have to be there during the whole mix. I simply have to let them — the creative people who I have a great deal of faith in — do their job. You don’t have to live through the agony of it. And you go through the reel and say, “I want that little cricket down a little bit there and I want this dialog cleaned up here.” They can just go in and surgically carve out that particular element and work on it separately without having committed yourself in a premix.

On Star Wars [Episode IV], there wasn’t an automated board at Goldwyn [Stage D, now Warner Hollywood] and you couldn’t punch in on the stereo mix. The mix was a performance. You literally had that 10-minute reel [in real time] and if anything was wrong, your only option was to go back and do it over. And that’s scary. Everything has kind of been designed that way, for that reality, but that reality doesn’t exist anymore. So with those two things, you don’t have to commit in quite the same way with quite the same high stakes that we were playing with before. And that’s a huge thing with everybody. I can remember mixers getting down to about 875 feet and everybody starts really sweating. Inevitably, the hardest part is right in the last 100 feet of the reel and if you screw that up, you’re dead! [Laughs.] You could feel the tension in the room.

If you were to play a 70mm print of the original Star Wars in the theater today, I would think that its sound levels would be downright tame compared to what’s expected of stereo films today. Could you talk about issues of loudness in movies and how digital has maybe given us too long a leash with regard to loudness?

As with everything in technology, once the new technology comes in, then people abuse it because they can do things they could never do before. I think we’re getting to a point now where we realize that loud is not better. Everything used to be squeezed into this very narrow bandwidth, which meant the quietest and the loudest sounds were not very far apart. Now we can bring them far apart, but what’s happened, instead of doing that, everybody just pushed everything up to be very loud. I am very conscious of what the audience is going through and you don’t want to have the dynamic range so great that when something else comes on, it jumps out at you. You can build toward it.

The familiar hum and Doppler shift of a light saber is just one of hundreds of Star Wars sounds that have forever been imprinted into the minds of moviegoers.

When everything is loud, two things happen: One, your ears get tired and it creates a kind of energy that maybe you don’t want in your movie. You don’t realize that when you turn it off, there’s a relief, because you’re agitated emotionally just because it’s such a loud sound, like being in a steel factory or something. Two, Walter Murch has a theory that I think is extremely accurate. The moviegoing experience is a social experience, and if the sound is so loud that you can’t hear the other people in the audience — you can’t hear them sigh and moan and jump and laugh and those kinds of things — you’re missing out on the communal experience, which is why people go to the theater in the first place. And so it needs to be at a level where you can just get a sense of the other people around you. You shouldn’t drown out their presence with a lot of overproduced sound.

We’ve been going through a problem on our trailers. We mix them and put them in the theater and you can hardly hear them because everybody else is mixing [trailers] to such a high level. So we’re caught in a quandary of doing it the right way and being drowned out or going on with the program. It’s not a pleasant experience; it’s like a rock concert.

You’ve worked with some of the great talents of the modern film sound era. I’m sure they’ve learned a lot from you, but what have you learned from working with them over the years?

I’ve learned a lot. These are people who are extremely talented in various ways. I’m very good friends with Walter. He is very intellectual, very thoughtful about the way he puts sound together. Ben [Burtt] is like a historian. I mean, he is like a walking library in terms of sound. Gary [Rydstrom] is an artist in his own right, especially in mixing. They all came up through the sound designer school of the holistic soundtrack. I’m a very strong believer in the approach that one hands-on person should be in charge of the soundtrack from the very beginning to the very end of the picture. We’ve had very vigorous conversations and debates about things, where something is placed in the track and how loud it should be and how it blends into the backgrounds and whether it should be a sparse track or a very complicated track in the area of music and sound effects and dialog. It’s very good to have those kinds of discussions about how this works in the real world and how people respond to it. I still think there’s a lot to learn about the aesthetics of sound in the context of a motion picture.

Mix film sound editor Larry Blake is currently immersed in sound editing and recording on Ocean’s Twelve, due out December 10.