This was Mix’s 6th most-read article of 2022!

In mid-March, Dolby Laboratories announced that Dolby Atmos Personalized Rendering, the next step to improve the headphone monitoring experience for mixers and engineers creating content in Dolby Atmos, would be entering public beta. It offers monitoring through a personalized Head-Related Transfer Function (HRTF) algorithm tailored to an individual’s unique anatomy, a first for Dolby Atmos.

Dealing with HRTF has proven a long-standing challenge in providing an accurate representation of dimensional audio in two channels, with transducers placed right up against (or inside) the ear. In the late 1950s, the US Air Force was a leader in HRTF application research through its flight simulator programs, where pilots needed to listen through headphones. In the 1980s and ’90s, pioneers in virtual reality and videogame sound, where localization and amplitude of audio sources are critical to the experience, extended the research.

Still, it remains a challenge. As an audio industry friend once said, “If there are 15.6 billion ears in the world, then there are 7.8 billion HRTFs.”

Essentially, HRTF refers to how each individual perceives sound from a point source, and it’s primarily based on human anatomy: size and shape of the head, shape of the pinna, distance between ears, the ear canal, the nasal cavity, bone density and hundreds of other factors. Humans localize a sound and sense its dimensionality by comparing its arrival at one ear with its slightly later arrival at the other. Infinitesimal differences in anatomy can mean big differences in perception.
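To put rough numbers on that timing comparison, here is a minimal sketch using the classic Woodworth spherical-head approximation. This is a textbook simplification, not anything Dolby has described; the function name, the 8.75 cm default head radius and the 343 m/s speed of sound are assumptions chosen for illustration.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # assumed: air at roughly room temperature


def interaural_time_difference(azimuth_deg, head_radius_m=0.0875):
    """Estimate the arrival-time gap between the two ears, in seconds.

    Uses the Woodworth spherical-head formula: (a / c) * (theta + sin(theta)),
    valid for a distant source at 0 to 90 degrees from straight ahead.
    head_radius_m is an assumed average; real heads are not spheres.
    """
    if not 0.0 <= azimuth_deg <= 90.0:
        raise ValueError("azimuth_deg must be between 0 and 90")
    theta = math.radians(azimuth_deg)
    return (head_radius_m / SPEED_OF_SOUND_M_S) * (theta + math.sin(theta))


# Same source position, three hypothetical head sizes.
for radius in (0.080, 0.0875, 0.095):
    itd_us = interaural_time_difference(90.0, radius) * 1e6
    print(f"head radius {radius * 100:.2f} cm: ITD about {itd_us:.0f} microseconds")
```

Even in this crude model, a centimeter or so of head size shifts the timing cue by tens of microseconds, and it ignores the pinna, shoulders and everything else a measured HRTF captures.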

Why does this matter? Because creating spatial audio content over headphones requires a process known as binaural rendering, and an HRTF is a core component of the process. Dolby isn’t the only company doing research in the field, but most binaural renderers used by creatives today rely on a default HRTF that represents average physical characteristics, a “one-size-fits-all” model that can produce widely different experiences for two people listening to the same content. For creatives, that’s unacceptable.
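At its simplest, binaural rendering can be pictured as filtering a source through a pair of impulse responses, one per ear, that are the time-domain form of an HRTF. The sketch below shows only that general idea; it is not Dolby’s renderer, and the function names and the three-sample toy responses are made up for illustration.

```python
def convolve(signal, impulse_response):
    """Direct-form convolution of two sample lists."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, sample in enumerate(signal):
        for j, tap in enumerate(impulse_response):
            out[i + j] += sample * tap
    return out


def render_binaural(mono, hrir_left, hrir_right):
    """Filter one mono source through a left/right impulse-response pair."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)


# Toy responses (hypothetical): a source on the listener's left reaches the
# right ear two samples later and quieter. Measured responses are far longer.
hrir_left = [1.0, 0.0, 0.0]
hrir_right = [0.0, 0.0, 0.6]

left, right = render_binaural([1.0, 0.5], hrir_left, hrir_right)
print("left ear: ", left)
print("right ear:", right)
```

Swapping in a different pair of responses changes what each ear receives from the same source, which is why a default, averaged HRTF and a personalized one can lead to different experiences of the same mix.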

“This is very clearly a solution aimed at the professional user,” says David Gould of Dolby. “Dolby has spent many, many years researching the issues involved with HRTF, and at any point where you render to binaural, it has to feel ‘natural,’ it has to sound neutral in timbre and tonality. It can’t sound processed. That is true across all forms of audio playback, certainly, but it’s especially critical in music mixing and videogame sound.

“There are now at least 450 Dolby Atmos music mixing rooms worldwide that we know of at Dolby, with 200-plus committed to coming online soon. This is over 300 percent growth since June 2021,” he continues. “To the creative community—and to our own development team—it’s all about scalability, translatability and trust. It’s about making the spatial experience right for each individual. Now it’s time to get Personalized Rendering in the hands of professionals and get their feedback.”

The process of creating a Personal HRTF (PHRTF) is relatively simple, Gould says, emphasizing that it has only recently become possible thanks to advancements in the iPhone camera, with depth sensing and 3D scanning built in.

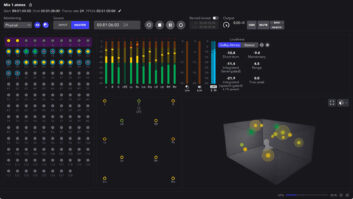

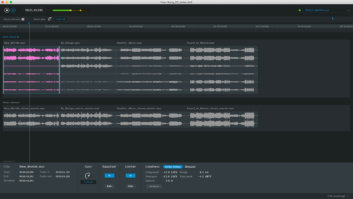

After downloading the Dolby PHRTF Creator app, a user takes a 10-second, 360-degree video of the upper body, then uploads it to the Dolby Cloud, where the shoulders, head and ears are broken down into 50,000 points of capture, analyzed and processed. The user then downloads a file (personal to that user and loadable on any compatible device, e.g., a co-producer’s laptop) to load into the Dolby Atmos Renderer application or the Dolby Atmos toolset for game development. It’s free to existing users, and it works with any headphones.

Right now, the PHRTF Creator app is iOS-only and works only with first-party tools, but this is a public beta, and that is sure to change. The goal is to eventually get the app out to all content creators. The ultimate goal is an incredible spatial audio experience for everyone, from creator to consumer.

Dolby didn’t necessarily invent any new “technologies” in the field of HRTF and acoustic mapping of the human ear with its PHRTF Creator app. Many other companies and many leading academic institutions have also developed tools and advanced the research. Sony has developed an incredible-sounding solution, though right now its personalization is tied to a specific room. A handful of plug-ins have appeared recently, modeling the acoustic response of well-known studios for headphone playback.

What Dolby has done is make things personal, going inside each engineer’s head. Where it leads, we’ll see, but the public beta is now in the hands of the creatives, and that usually leads to good things.