The backstage Technical Tour for the annual Grammy Awards, one of the more complex live entertainment broadcast productions, includes many audio stops. It’s a music show with multiple acts and two active stages, but the first stop is typically away from the main action, at the two remote vehicles where the music is mixed (in 5.1 surround) before being sent to the main Denali truck. There it is combined with podium audio, announcements and all the other elements that make it to air.

There is Wireless World, behind the stages, where frequencies are coordinated for hundreds of channels from multiple wireless systems and microphone manufacturers. There’s the Front of House pavilion, with multiple desks feeding the arena P.A. installed just for the one night, and likewise the multiple desks at two monitor stations feeding the performers, each of whom is on stage for a single song. And there are Comm stations throughout the facility, whether it’s Staples Center in L.A. or, as in 2018, Madison Square Garden in New York City.

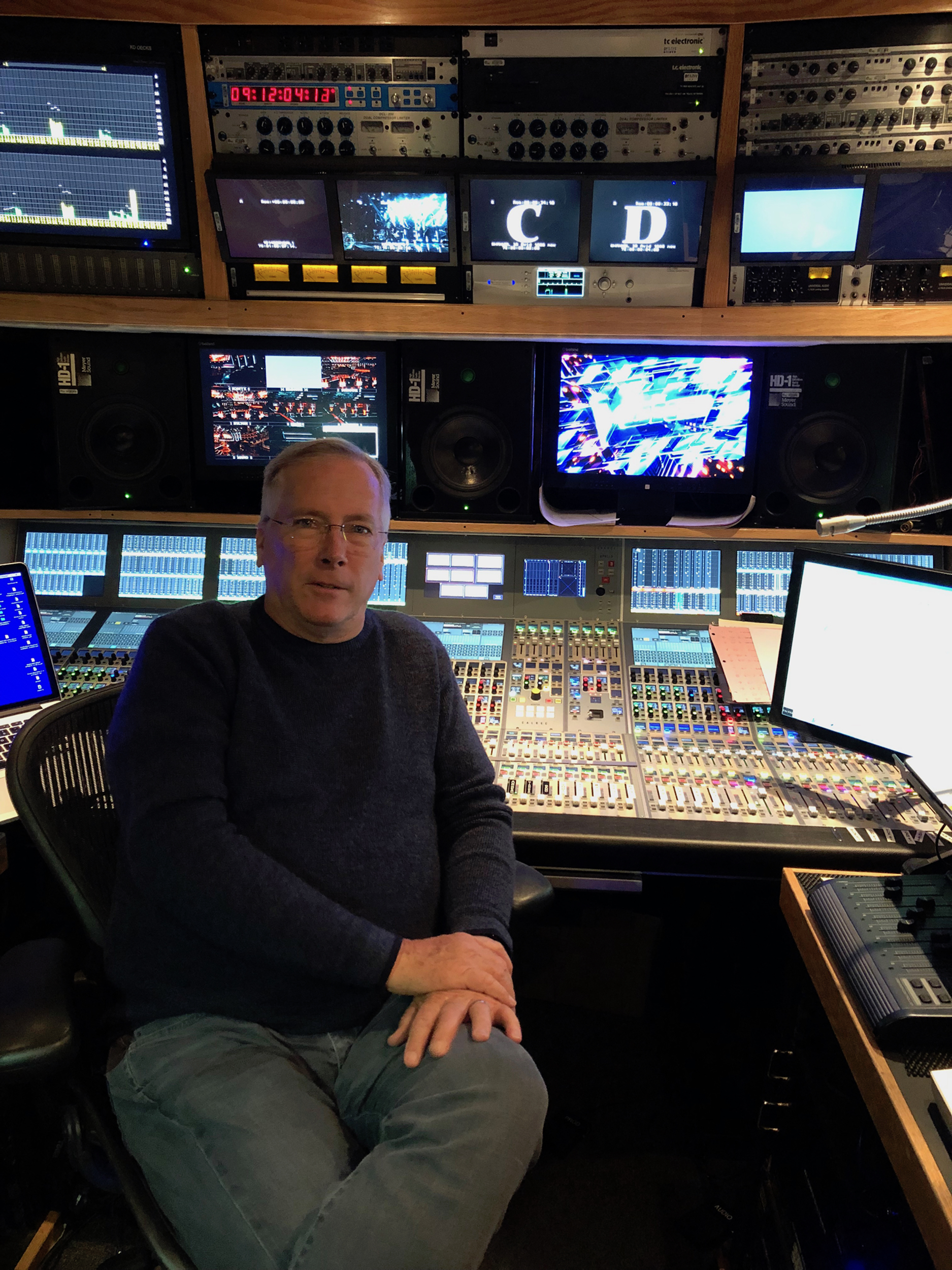

And, finally, there’s the makeshift room littered with tables and laptops, where guests stop to visit with Michael Abbott, the man responsible for bringing all the audio systems together. It’s a hectic week of setup and rehearsals for Music’s Biggest Night, yet at the center of it all, Abbott is a portrait of calm. He’s done a lot of big shows over the past few decades, as audio engineer, mixer and coordinator; come hell or high water, the show must go on.

In a long and varied career at the heart of broadcast audio, Abbott has become that rare character who can bring everything together. We thought now would be a good time to check in with him and assess the state of live television audio.


MIX: What was 2018 like for you? What shows did you work on?

MICHAEL ABBOTT: The year 2017 ended and 2018 started with the Fox Times Square New Year’s Eve 2017 telecast. During the first quarter of the year, that was followed by the SAG (Screen Actors Guild) Awards, DirecTV Super Saturday Night Live with Jennifer Lopez in Minneapolis during Super Bowl weekend, the 60th Grammy Awards at Madison Square Garden, and the Film Independent Spirit Awards.

From April to July, I mixed the audio for the streaming media coverage of the “March for Our Lives” rally in Washington, D.C., with 150,000 people in attendance and millions more watching online; an NBC musical reality pilot, Songland, whose premise is like The Voice meets Shark Tank; The Voice live shows and The Voice Season 14; the 10th season of Shark Tank; the ESPY Awards; sound design for the MGM/CBS project TKO; a Shawn Mendes concert streamed on Apple Music; and consulting for DirecTV on several 4K TV concerts.

From August through December, I worked on Stand Up To Cancer at Barker Hangar, which aired live on 70-plus broadcast networks and streaming outlets; I started the tapings of the blind auditions for the 15th season of The Voice; in October I started working on a new series, Pod Save America, which broadcast live on HBO from four cities over four weeks; and from November to December I followed The Voice live season for 15 broadcasts. Now onto 2019, which is shaping up to be another busy year!

How did this all start for you?

I started straight out of high school in the ’70s, building speaker cabinets for a sound company that provided sound reinforcement for touring rock bands. I was taught how to build transformer-isolated mic splitter systems and assemble hand-wound inductor coils for passive two-way crossovers, and I learned how to identify frequencies using third-octave graphic equalizers. I also worked as the third man on the sound crew, tasked with stacking speakers, AC power distribution and loading the truck quickly and efficiently. That hands-on apprenticeship led to my being offered the FOH and foldback mix positions for rock, pop, jazz, Latin and classical musical acts, which gave me the opportunity to travel and work around the world for 15-plus years.

In 1982 I started mixing FOH and stage foldback for various TV projects, such as the 1984 Olympics’ opening and closing ceremonies, the Academy Awards and the Grammy Awards. In 1986, I was hired as a staff mix engineer at the then-fledgling Fox Network, the day after the premiere of the Late Show with Joan Rivers. In 1986, I moved over to CBS, working as a staff audio mixer at their Television City facility for six years. Starting in 1994, I spent six years at Paramount Studios, working on the syndicated entertainment news show Entertainment Tonight and the Leeza talk show for NBC.

Working at the TV networks and production facilities provided me with real-world, hands-on training and a broad understanding of broadcast audio workflows. During my tenure at CBS, I was assigned to mix talk shows, game shows, sitcoms, soap operas, variety specials, post-production, promo production, sporting events, network news and ENG remotes. Working across all of these disciplines gave me a diverse range of skill sets.

What is the audio setup for the types of TV shows you do? Do they differ considerably?

Talk show and game show projects are usually staffed with a production A-1, two to three floor A-2s, a P.A. mixer and, if needed, a foldback stage mixer. Production schedules vary from show to show. A typical four-day production provides for an ESU (Equipment Set Up) of five hours, and we are on camera and rehearsing with musical talent or stand-ins after the meal break on the first day. Then there are eight to ten hours of rehearsal over the next two days, with a VTR or live show on the fourth day.

For The Voice live shows, we have 17 audio mixers and techs: a production A-1, a production audio track playback/recordist, a broadcast music mixer, a music mix recordist, four to six floor A-2s, a FOH and foldback mixer, two foldback assists, a sound system tech and an RF coordinator monitoring the operation of 40-plus RF devices. During The Voice lives, which run six to seven weeks each spring and fall, our production schedule provides two 10- to 12-hour days of rehearsal for the 15-plus musical performances on our Monday-Tuesday broadcasts.

On show days, we have a technical cue-to-cue in the morning and a dress rehearsal in the afternoon. The amount of rehearsal time the production company builds into the schedule is a production value in its own right, and it is what produces the “Big Shiny-Floor Broadcast” The Voice is known for.

Tentpole event productions, such as the Super Bowl, Academy Awards and Grammy Awards, can be staffed with 40 or more audio mixers and techs. At the Grammy Awards, there can be 60-plus audio mixers and techs depending on how many artists bring in their own tour audio stage foldback systems. These techs can be deployed to 7 to 13 mix stations, three performance stages inside the venue and three broadcast mix platforms located outside of the venue.

The Grammy production schedule provides a pre-cable install day, when four A-2s run the fiber and analog mults, followed by one day of ESU. Time permitting, the ESU day can include a tech cue-to-cue set, with all the scenic elements preset and spike marks put on the stage. This gives the production an idea of where potential staging issues may arise, and audio is onstage coordinating with the stage managers on how best to deploy personnel and hardware. For the next three days, there are 10- to 12-hour days of rehearsals, with 7 to 10 artists rehearsing each day. On the day of the broadcast, we may start with a rehearsal of an artist, followed by a three-hour dress rehearsal.

As the three days of rehearsals are done out of sequence, the dress rehearsal is the first time we see how the transitions between performances, with their set changes, will work. Inevitably, logistical issues develop during the dress rehearsal; we have a saying: bad dress, great show. After the dress rehearsal, interdepartmental post-mortems are followed by a quick reset for top of show, and the broadcast starts shortly thereafter.

What is the workflow like?

The Voice has developed a very efficient workflow for audio acquisition and for coordinating with the post-production department to provide audio resources for the video edit and audio sweetening of the show. During the blinds and battles portions of the season, the shows are taped and edited. The layout of the audio record tracks on the 14 XD record decks is standardized from season to season, so the editors’ assistants can easily locate audio tracks on the edit bay servers from previous tapings and broadcasts across the past 15 seasons. We also provide a 64-channel DAW record as a safety audio record.

When a performance involves complex scenic elements and staging logistics that cannot be set within a segment, we will pre-tape it 35 to 40 minutes before the live broadcast. After these pre-tapings, the artist will sometimes request an audio remix of the performance in The Voice music mix booth. The remixed music audio file is delivered to the production audio booth for integration back into the production 5.1 pre-record. This element is then delivered to our video editor for layback into the performance video; the editor replaces the original audio with the remixed soundtrack, spotting the waveform of the remixed audio to the waveform of the original. He then renders that file into the video master in the edit bay to complete the audio edit. This integrated DNX file is pushed back to the production truck server for QC and played out from the EVS as a discrete 5.1 audio mix during the live broadcast. A sweetener in the production audio booth provides sweetening to bridge the in and out points of the playout against the live in-studio audience. All of these remix tasks are completed in the half-hour before the live broadcast.

Where do you see the biggest changes coming to TV in the next ten years?

Entertainment trucks use MADI for the majority of their audio transport. The NEP Denali fleet has started using IP-based protocols for mapping audio via the routers and record systems. To date, Dante has seen only limited deployment for audio transport in the entertainment sector.

Properly deploying a large I/O count over a Dante network requires a dedicated IT tech to manage the multiple switches and audio I/O, on projects such as a golf tournament or a multi-studio production facility. In the entertainment market, establishing the need for an IT manager is challenging, due to cost restrictions and a lack of understanding of what operating a robust IP network requires.

Adoption and implementation of the AES67 standard will provide audio-over-IP interoperability. This will be the biggest change to our workflow as we continue the transition from an SDI-based infrastructure to an IP-based infrastructure and workflow.

Broadcast consoles pull different duty than studio or live consoles. What do you like?

I started using the Calrec Q1 analog console in the ’90s, then moved on to the Alpha, and I’m currently on an Apollo. Calrec platforms provide me with the hardware resources needed for the complex audio projects I work on. Eighty percent of my projects are done on Apollo platforms, and the Apollo has been used on the majority of the entertainment projects I have been involved with over the past decade.

What does the console offer, specifically?

The replay function on the Apollo console lets me go from production mix status to a remix or post-show fix with a single push of a button. This is especially important when faced with a West Coast re-feed or a quick music remix a half-hour before a live broadcast.

Fader swap is also a big part of laying out input sources on the console, especially when starting a new show. It allows me to re-map the fader assignments on the surface; as the show develops, I move and consolidate the top layer so it holds only the essential faders I need. I also assign GPIs to faders for AutoFader functions; the control parameters available in that function let me program smooth transitions when EFX mics are triggered by the video switcher.

The audio I/O resources on the NEP/Denali consoles are substantial. The Denali Silver has an 8192 x 8192 I/O configuration, and with this capability I am able to create workflows that enhance the audio deliverables. In addition to mapping primary mic sources to the audio records, the I/O resources available on these platforms let me add secondary mic sources with attenuated head amps to the audio deliverables. This gives the audio post mixers dual sets of mic sources in the event a primary mic is overmodulated. The multichannel embedding provided by the console resource pool has allowed us to populate up to 16 audio channels on video record devices, which in turn gives the post-production editor a wider set of audio resources to choose from.

You have been part of the Grammy Awards for many years. How has the production changed over the past few decades?

I started out mixing the Grammys’ artist stage audio on a single analog console, using grease pens to mark the settings of auxes and fader EQ for 18 to 22 performances. Fast-forward to today: we have eight engineers and mixers for the stage audio alone. The use of digital consoles has allowed for higher channel counts per performance. Six to seven artists bring their own stage foldback consoles, and we have to provide mic signal distribution to them; this distribution is still in an analog format. With eight-plus mix consoles in play at any given time, that factor alone makes for a complex connectivity matrix. We are constantly looking for audio signal distribution solutions that are cost-effective compared to the current analog platform.

As far as awards shows in the broadcast market go, a paradigm shift among viewers is already taking place because of the alternative programming offered by streaming outlets, which in turn is diminishing the audience for the broadcasts. Streaming media is going to force producers to think outside the box in the next few years. A reset in how these productions are produced will be required in order to skew better toward streaming media and the coveted 18-49 age demographic that producers and advertisers are looking for.

Do you think that TV viewers’ audio expectations have increased over the last few years?

My goal is to provide an audio soundtrack with the highest audio quality and resolution for both live and posted projects. 5.1 broadcasts try to re-create the soundfield of the space the show is broadcast from, and this is one of the aspects that interest me most. When I set up a mix, I attempt to provide the sonic perspective of “the seat in the fourth row from the front of the stage.” That is what an audio soundtrack for a live TV broadcast should be providing.