The ride has taken him through the California school system, where he taught English; through long days as a working musician; and through a stint as director of A&R for 415 Records, one of America’s first punk and new wave labels. David Kahne is also a Grammy Award winner; he snagged the trophy for his work on MTV Unplugged: Tony Bennett, voted Album of the Year at the 37th Annual Grammy Awards (1994).

Spanning a wide range of genres, Kahne’s clients include The Bangles, Stevie Nicks, Renee Fleming, Sugar Ray and a guy named McCartney, among many others. Current projects include producing an album for Mindy Gledhill, an independent artist from Utah, and scoring a pair of television series, which he will also be executive producing.

But a lot of Kahne’s time over the past couple of years has been taken up with his work creating a sonic environment for National Geographic Encounter: Ocean Odyssey, a Times Square installation designed to give viewers the most dramatic sense of the ocean experience possible. Mix spoke to Kahne, who runs both the publishing company E=Music and production company SeeSquared Music out of Flux Studios on the Lower East Side of Manhattan.

Hello. Let’s start with your setup at Flux.

David Kahne: I run Cubase and Nuendo, and other DAWs, on a custom PC built by the California-based company Vision DAW. My interfaces are Antelope Orions, and all the routing is through MADI. I have a pretty dense hybrid system that uses external analog gear like old Federal Limiters, several Retro boxes and eight different analog compressors. All the analog gear and also the bus outputs of my computer sum into Dangerous Audio 2 Bus+ boxes, and then route through a variable analog stereo bus chain. That stereo mix gets converted using Dangerous Convert+. All of my clocks are Antelope, driven by the Antelope Atomic clock.

For recording, I have some great guitars and synths, a Yamaha C7, and a strong set of mics, including a Lucas 47 and a Violet Amethyst.

We’ll get right to it. How did you get this Nat Geo gig?

SPE Partners, who own and developed the Nat Geo show, were managing an artist I’d produced and with whom I’d stayed friends. They developed the show from the ground up, and I was with them from the beginning.

What were pre-production meetings like?

They started very generally; there was no model for what we were setting out to do. I was taking notes, trying to find sources for sounds. Turned out that National Geographic had great visuals but not very good sound resources. I began finding sources for audio, and I would take the sounds I liked and send them to a couple of the Nat Geo scientists who had some sound backgrounds and ask if they sounded authentic. I’d get responses like, “That sounds like a mating call for the humpbacks, but not a hunting call.”

The other thing that I started trying to do was use sound to re-create the feeling of being underwater. When you’re in the ocean, you’re hearing your own body a lot, for example. One of the most famous National Geographic photographers told me after he went through the experience that the sound was great, mostly because of the feeling. That was gratifying.

Audio gear became more and more important. I got deeply involved in speaker placement, speaker size, how many speakers. On several occasions I had to go to the general contractor to get extra speakers installed in a couple of the rooms.

What does the ocean really sound like?

Sound is vast, and we can’t begin to encapsulate what it is. The frequency range goes way above our hearing, and whale sounds can go subsonic; one recording I have analyzes as 16.2 Hz. I was told that a whale will catch a frequency and stay there perfectly. Maybe they have perfect pitch.

I went diving to get to know the ocean. My cousin, Don Hurzeler, has been a surfer since grade school. After a successful career, he moved to Hawaii and became an underwater photographer. He took me out to many different ocean environments, like a cleaning station—a cove where sharks and dolphins swim through to get cleaned by small fish that eat the parasites and other unwanted organisms off the creatures. We dove everywhere with just masks and snorkels, so I wasn’t going very deep, but I didn’t want to dive with a tank and get the extra noise.

One example of ocean acoustics is that sound can’t travel through the air bubbles. The bubbles are an acoustic barrier. So in a bait ball, where the fish are swarming, the whales swim around below them, in a circle, and let out air. That creates a tube of bubbles around the bait ball. This confuses the fish because the local acoustics are disrupted, and the bait ball pulls tight. Then the whales come up through the bubbles and feed. It’s fascinating and extremely complex.

A sound engineer who lives in Milan, Sabino Cannone, made a lot of the surround sets that we use. His work helped us create ambiences that affect the mood in the rooms. For example, I’d take some shrimp sounds, clean them up and send them to Sabino, who also works on the Nuendo platform. He would then convert the stereo files into surround sets.

Did you record source material, rely on libraries, or a combination of both?

I used many libraries, although I did make an underwater mic and recorded effects in my bathtub and sink. I also found people like Robert McCauley from Curtin University in Australia, who goes into the sea and makes hi-fi recordings. He sent me great humpback sounds, bait ball ambiences, shrimp recordings (shrimp have a very distinctive sound), some porpoise schools and more.

I used Sound Soap 5+, which is a great plug-in for removing unwanted sound. It runs inside Nuendo and is fantastic. It has a “learn” function that lets you find one second of the sound you want to get rid of in the clear, hit learn, let it go, and that sound is gone. And it runs as a plug-in within the DAW, which is so convenient. A lot of the library sounds are pretty messy, and Sound Soap was extremely helpful with all that.

Every oceanic institute has sounds on their sites, and I used a lot of them. Sounddogs [sound effects library] has great porpoises and orcas. By the way, I learned that orcas imitate other animals, including humans; they sneak up on porpoises by making porpoise sounds. Other resources I tapped into were Moby Sound, Cooperative Institute for Marine Resources Studies in Oregon, Woods Hole Oceanographic Institution and Aguasonic Acoustics.

A friend of mine is an avant-garde percussionist. I was recording a piece of his where he puts Super Balls on a stick and drags them across a big box. It creates a resonance that is exactly like a humpback call! Some of the humpback sounds were made this way, which let me get an incredibly clean result. New Jersey’s Ocean Institute gave me the best humpbacks.

My cousin pushes sharks away with his camera. Crazy! He told me that he once got way closer to a humpback than you are supposed to get. When the whale blew out of its blowhole, it made the loudest sound he ever heard! That sound is in the sound-only room at Ocean Encounter.

How did you balance music and sound design?

The original idea was that we were going to use sound design elements only. Toward the end of the process, I started adding in some music; a main theme at the beginning and end of the show was always there, but I added music throughout the show for more dramatic effect. Sound design still comprises about 90 percent of the audio.

One great example is the sea lion interactive space. As you stand in front of an eight-foot screen, a sea lion comes up to you; you can make gestures and it reacts. There are 87 different gesture clips, and each one had to be scored separately for movement and barking. It took about two months to do the sound design for that room alone, which included not only the sea lions themselves but the ambiences for the whole space.

Did you mix in your studio or at the venue?

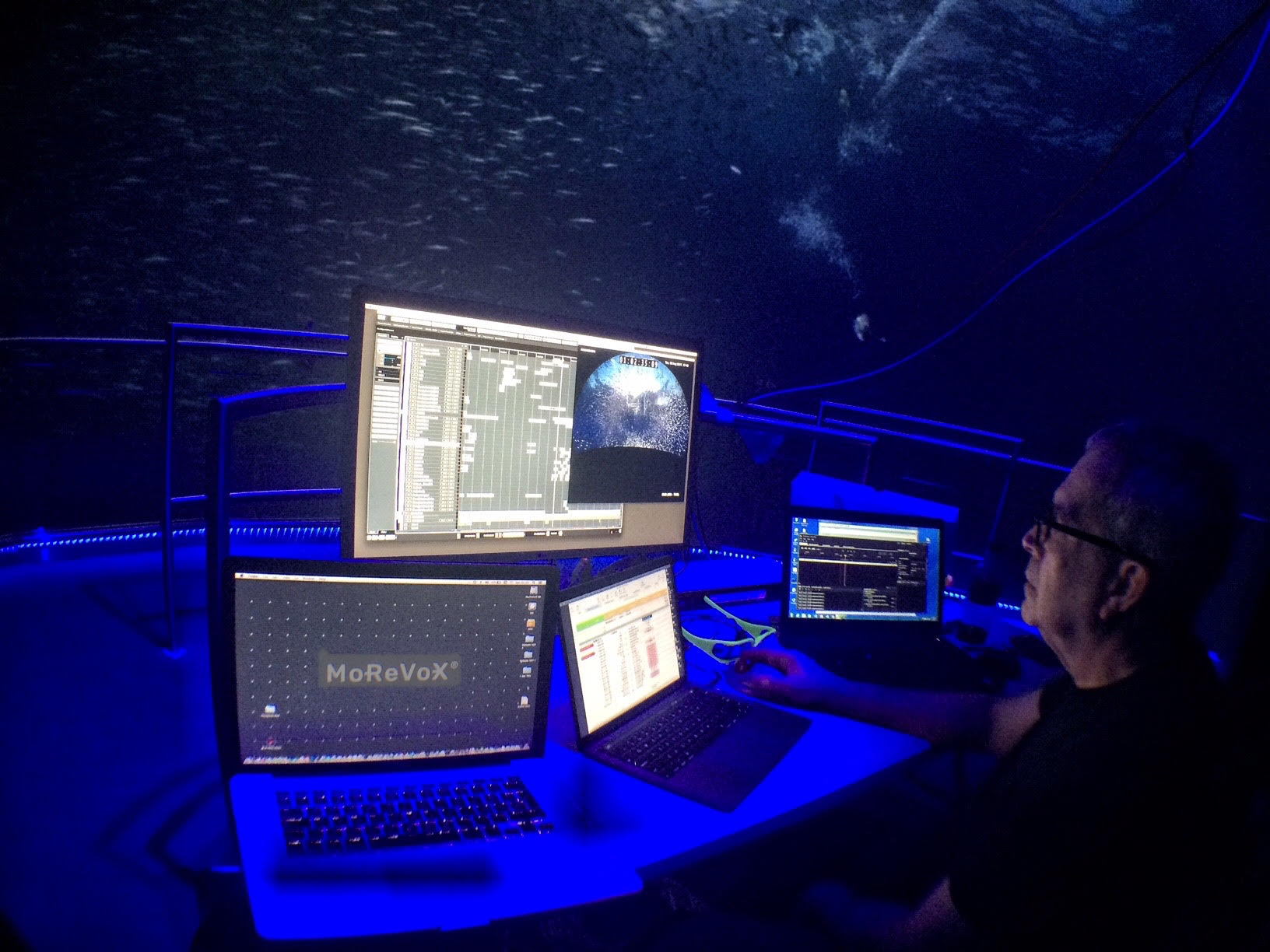

Both. At my studio initially. I set up a 5.1 system and started mixing there using Nuendo and Focal speakers. I would pan around to get a sense of the motion from front to back and left to right, ignoring the fact that playback was eventually going to be in 12.1 or 36.2. I was given low-resolution versions of the visuals, so I had a rough idea of what was going to be playing back in the venue on the screens. The visuals went through some pretty drastic changes with each iteration, so I kept updating the sound to match. But to get a real understanding of how sound would work, I had to mix in the environment itself.

What is the venue audio playback system? Do listeners have headsets? Do they control audio playback in any way?

No, everything is played back through installed speakers. Three of the rooms have big screens. In those rooms I had to use some transducers on the screens; the screen surface becomes a speaker cone. One of the screen speakers is the Novasonar GK60. It’s a screen that has transducers built in. The sound is good up to a middle SPL, but for power, I had to fill in with regular speakers in the room, mostly QSC. The audio system is part of a larger system, and the amplifiers are controlled by a massive overall control system that also runs the lights, projectors and event timing.

The last room is a three-story dome. The transducers weren’t close to being enough to power the room, so I put in a Meyer Sound 18-inch subwoofer and two butt kickers on the viewing platform. Then I placed 12 other speakers under the platform and on the floor at the base of the dome, which created enough level to get the apparent volume up without distortion and overdriven transducers.

This is an interesting room. When I drove my first sound through it, the material dedicated to the far right speaker came up on the left. I thought the room was wired wrong. But it was just a reflection! You’re standing facing a perfectly spherical half-dome, so when viewers hear some of the sounds in that room on the left, they’re actually coming from the speakers on the right.

There are 24 projectors in that room. It’s a 3D room, so the audience is wearing 3D glasses. It’s a four-minute-long experience, and the screens have to be integrated perfectly.

All of this runs off of servers. In all there are 10 rooms, with racks and racks of amplifiers and servers. The main control system is by QSC. They make very dependable speakers, too, and their amplifiers worked great. Four channels in each amp, and each channel can drive two speaker sets.

Does the audio follow the listener through the space? How did the environment affect your work?

Yes, the audio does help to lead viewers through the experience. The soundtrack was greatly affected by the acoustics of the environment. I worked up sound in my studio, took it to the space, and would often have to filter a track or recompose a section to get rid of certain reflections by using different registers, durations or volumes. It’s like writing a middle C for a violin and feeling that it sounds wrong in whatever context you’re placing it in, so you try the viola on that C and, hmm, that sounds weird as well. But give that C to the cello and it sounds perfect!

Overall, there’s a ton of high-end reflective surfaces facing each other in the experience, and I had to work on those problems at the compositional, design and even hardware levels. Things like moving a more midrange speaker to another place in a room, for example.

One room is only sound; the room is almost dark. It’s a real 36.2 surround environment, with no transducers. I sat in that room for days mixing in 36.2 surround, trying different sound developments and narratives. For example, I put the same 25-cycle tone into both subs and then slightly detuned one of the channels using a mod sweep in increments of a cent. The information from the two subwoofers starts to beat, and the beating of the bass information constantly changes, which resulted in a disturbing sense of the low end moving. I built some rhythms on top of that which were compositional and also moving in the acoustic space in certain rhythms.
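The slow movement Kahne describes follows from basic acoustics: two tones at slightly different frequencies beat at the difference between them. The sketch below is only an illustration, not his actual session setup. It assumes the "25-cycle tone" is 25 Hz and uses the standard definition of a cent (1/1200 of an octave); the function names are hypothetical.

```python
import math


def detuned_hz(base_hz: float, cents: float) -> float:
    """Frequency of base_hz after shifting it by `cents` (1200 cents = 1 octave)."""
    return base_hz * 2.0 ** (cents / 1200.0)


def beat_frequency_hz(base_hz: float, cents: float) -> float:
    """Beat rate heard when base_hz plays against its detuned copy."""
    return abs(detuned_hz(base_hz, cents) - base_hz)


def beat_period_s(base_hz: float, cents: float) -> float:
    """Seconds per beat cycle; infinite when the two tones are identical."""
    rate = beat_frequency_hz(base_hz, cents)
    return math.inf if rate == 0.0 else 1.0 / rate


if __name__ == "__main__":
    # Assumed values: a 25 Hz tone in one sub, detuned copies in the other.
    for cents in (1, 5, 10, 50):
        rate = beat_frequency_hz(25.0, cents)
        print(f"{cents:>3} cents: beat rate {rate:.4f} Hz, "
              f"period {beat_period_s(25.0, cents):.1f} s")
```

At so low a frequency, a detune of a few cents yields a beat cycle lasting many seconds, which is consistent with the slowly shifting low end described above.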

Another interesting problem came when I was working on the squid battle. This fight takes place across two screens that are about 60 feet long, one on each side of the room. The screens are concave, and so reflections are bouncing between them—I could hear 12 reflections from one sound.

The squids fight on one screen, then go overhead and end up on the other screen. We put speakers in the ceiling so that when the squids go from one screen to another, I could pan across the top. But because of the reflections, I couldn’t have much movement across. The solution? I used only the floor speakers below each screen and panned quickly across from one set to the other, and it sounded like it was moving with some drama across the room. I ended up using the ceiling speakers for ambience only, and they were great for that because they weren’t pointed at the screens.
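A quick pan between two speaker sets can be pictured as a crossfade that keeps the total acoustic power roughly constant. The sketch below uses the common equal-power (sine/cosine) pan law as an assumption; the interview does not say which pan law was used in Nuendo, and the function names are hypothetical.

```python
import math
from typing import List, Tuple


def equal_power_gains(position: float) -> Tuple[float, float]:
    """Gains for (set A, set B); position 0.0 is all A, 1.0 is all B."""
    p = min(max(position, 0.0), 1.0)  # clamp to the valid pan range
    angle = p * math.pi / 2.0
    return math.cos(angle), math.sin(angle)


def pan_sweep(steps: int) -> List[Tuple[float, float]]:
    """Evenly spaced gain pairs for a sweep from set A to set B."""
    if steps < 2:
        raise ValueError("a sweep needs at least two steps")
    return [equal_power_gains(i / (steps - 1)) for i in range(steps)]


if __name__ == "__main__":
    for gain_a, gain_b in pan_sweep(5):
        # gain_a**2 + gain_b**2 stays at 1.0, so loudness holds steady
        print(f"A={gain_a:.3f}  B={gain_b:.3f}")
```

Running the sweep over fewer steps, or stepping through it faster, corresponds to the quick floor-to-floor move described above.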

Another really unexpected situation came up in this room when I started to add music for when the squids kill each other. I realized, as I added strings, that I had gradually moved roughly into the key of D with just the sound design. I had gravitated toward a tonal center without realizing it. I think as I worked in the room, I was working toward resonance, so it makes some sense there would be some subliminal tonal choices made.

Complete Control Through Nuendo

We asked David Kahne how he managed such a wide array of custom surround tracks and how it all came together. He said:

Cubase is my go-to DAW. For me it sounds the most in phase, and the onboard plug-ins are fantastic. Their built-in sampler is killer. I also use Steinberg’s CC121 control surface, which I love.

I have both Cubase 9.5 and Nuendo 8 on my laptop and DAW computers. When I was working in my studio, particularly on music tracks, I’d work in Cubase and then dump MIDI to audio, at which point I’d bring the audio tracks into Nuendo.

I’ve been a Nuendo fan since they first introduced the product. When I got the ocean project and knew I would be in these huge surround environments, I upgraded to Nuendo 8. It’s a fantastic post-production workstation, phenomenal for surround sound work. The surround compressors, EQs and expanders are automatically ported to whatever surround format you’ve chosen.

Nuendo 8 has a very cool plug-in that lets you randomize a sound file by pitch, time and tone, and lets you dial in the amount of randomization you want to apply. That came in handy a number of times on this project. For example, not all of the porpoise sounds I had could be cleaned up to the point where they were usable. I took the good ones, kept copies of the original and randomized them. There’s a scene that has 20 seconds of porpoise group activity that has been expanded to two minutes, and you can’t tell that all of the sounds derive from the same 20-second audio file. It takes some subtle tweaking, but I got the desired result every time.

The software system that runs the 36.2 room is called Iosono, made by Barco Audio Technologies. It runs exclusively on Nuendo. Iosono has its own computer system and sound routing, and it’s controlled through a proprietary plug-in called SAW (Spatial Audio Workstation). With that software installed in Nuendo, you can pan to any speaker or designated speaker set. Up and down, across the room from front right floor to top left rear. In the sound-only room, I was running 250 tracks of audio in Nuendo on a PC laptop and I had no CPU issues.

Iosono also makes a plug-in for Nuendo that allows you to replace a Nuendo surround matrix with the Iosono Anymix plug-in. It’s a variation on the Nuendo matrix. With Anymix, you can define the space beyond the perimeter of the speakers, and when you pan past the perimeter, volume, EQ and other parameters can be changed/lowered so that the sound feels like it’s moving away from you as a sound would in the real world. It saves hours in the kind of setting of the Nat Geo experience.
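To make the idea of a sound receding past the speaker perimeter concrete, here is a minimal sketch of one generic approach: unity gain inside the perimeter and inverse-distance attenuation beyond it. This is an assumption for illustration only and is not a description of how Anymix works internally; the function names and the choice of meters as units are hypothetical.

```python
import math


def distance_gain(distance_m: float, perimeter_m: float) -> float:
    """Linear gain: 1.0 inside the perimeter, perimeter/distance outside it."""
    if perimeter_m <= 0.0:
        raise ValueError("perimeter must be positive")
    if distance_m <= perimeter_m:
        return 1.0
    return perimeter_m / distance_m


def gain_db(gain: float) -> float:
    """Convert a positive linear gain to decibels."""
    return 20.0 * math.log10(gain)


if __name__ == "__main__":
    # Assumed room: speakers on a 5 m perimeter, source moving outward.
    for distance in (2.0, 5.0, 10.0, 20.0):
        g = distance_gain(distance, 5.0)
        print(f"{distance:>5.1f} m: gain {g:.3f} ({gain_db(g):.1f} dB)")
```

With this law, each doubling of distance beyond the perimeter lowers the level by about 6 dB; a real tool would typically also roll off high frequencies, which this sketch leaves out.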

Nuendo also worked flawlessly with the Dante system, which was used for the live room mixing in all the rooms except the sound-only Iosono room. Each speaker in each space had a dedicated Dante channel running to it, and Nuendo, working through the Dante software installed in it, sees each channel, and each channel can be assigned to through a surround matrix.

In some of the rooms, I was using up to 15 different surround matrices through the Dante routing, and it worked perfectly. In the final dome room, for example, I ran 300 tracks through 20 different surround matrices in Nuendo, busing out to 24 separate Dante/speaker channels. In that room I was using Nuendo on a Mac Pro laptop, and I just cruised along trying to solve all the crazy acoustic and design and compositional problems, with no problems from the software. All good!