The Holy Grail for immersive audio content creators has long been the ability to deliver to the massive device-carrying, headphone-wearing global audience. Published statistics vary, but roughly two-thirds of the world’s 7 billion-plus inhabitants own a smart device of some sort or other, and nearly all of them consume streaming and over-the-top (OTT) content. Until recently, however, delivering an authentic immersive audio experience to headphones has proven a tough nut to crack.

The solution, perhaps not surprisingly, is binaural. It’s not surprising because binaural delivery has been around since 1881, when audiences in France were able to listen to a performance from the Paris Opera over two telephone lines, one for each ear. Interest in the format waxed and waned over subsequent decades. For a time, broadcast radio dabbled in binaural drama productions. Lou Reed recorded a binaural album, Street Hassle, in 1978.

Then, over the past decade or so, binaural began to make a comeback with VR and AR. More recently, spine-tingling ASMR videos using binaural techniques have proliferated. The ability of the Dolby Atmos and Sony 360 Reality Audio platforms to render immersive mixes for headphone listening, along with Apple Music’s recent launch of its Spatial Audio streaming service, has once again put the spotlight on binaural.

Just don’t call it binaural, says Paul Hubert, founder of Immersion Networks. “The term binaural throws me a bit,” he explains. “I associate it with the microphone technique, which is very limiting from a platform perspective.”

Immersion Networks is one of several technology companies, along with DSpatial and Timber 3D, that have been developing tools for the creation and delivery of 2-channel, immersive audio. To be fair, none of these software platforms offers a binaural workflow in its original sense, that of recording with microphones positioned in the ears of a dummy head. That said, binaural—a word that simply refers to the use of both ears—has become shorthand for the 2-channel delivery of immersive sound.

IMMERSION NETWORKS MIX3

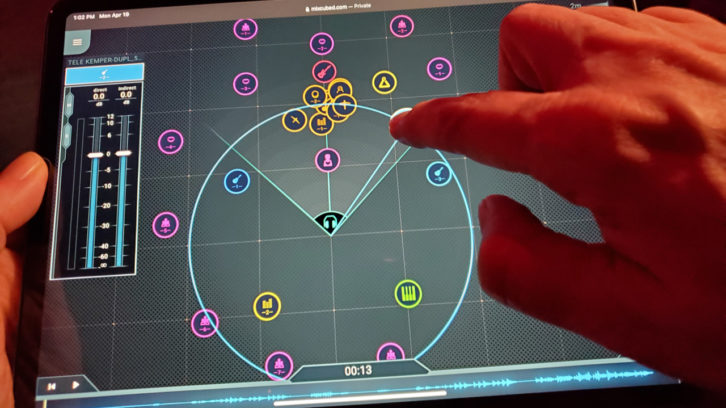

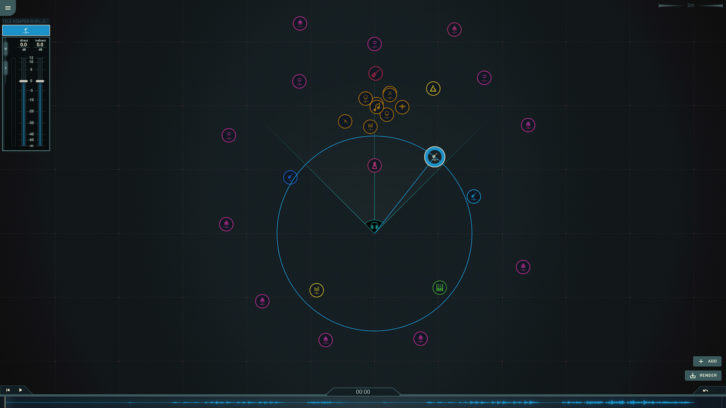

Immersion Networks, founded six years ago in Redmond, Wash., by former researchers from Apple and Bell Labs, recently launched mix³ (mix cubed), a cloud-based platform that enables users to create three-dimensional music or sound design for delivery to headphones. Users access mix³ through a browser from any computer or tablet. Currently offered as a 30-day free trial, mix³ will ultimately be accessible through a tiered subscription plan.

Having uploaded mono or stereo tracks or stems to the cloud, mix³ users simply position the dots that represent them using the intuitive UI. The platform includes automation for dynamic moves.

“We set out to build a system that replaces the pan pot,” Hubert says. “I’ve had people realize that because they’re not putting two things in the same place, they’ve discovered a new way to mix; they’ve discovered all this space. That was by design. Don’t put two dots on top of each other, and guess what? You get this incredible render.”

The platform runs in the cloud on a general-purpose processor (GPP). “A general-purpose processor these days is faster than DSP and easier to program. It costs ten times as much, but when you’re using a cloud service, you’re only renting a little piece,” according to chief scientist James “JJ” Johnston. An authority on human perception and signal processing, Johnston worked for years at Bell Labs and helped develop the company’s MP3 and AAC codecs.

In a scheduled September feature release, the company is adding headtracking for Apple’s high-end headphones. “Apple has 100 million AirPod Pros and Maxes out there, so it’s kind of the default,” Hubert says. Support for other brands will be added as those companies also roll out the capability.


Rather than data-compress the output file, the company worked for years developing highly efficient codecs and other technologies leading up to the launch of mix³. “At the end we do a binaural rendering,” Johnston says. “But we do an acoustic simulation to get to the point where we know what to feed into the binaural rendering. You can do it in a way that allows it to sound a whole lot more natural.”

“One of the comments we’ve gotten over and over is, ‘Whatever I put in sounds better after it comes out.’ People have commented that it’s better than any in-the-box mixing and better than any console, in terms of sound quality,” Hubert reports. Users have reportedly signed up for mix³ from 70 countries. “Anybody who’s got stems or tracks can make a good immersive mix without having to go and buy $30,000 worth of equipment,” says Johnston. “We’re not thinking of democratization, but just making it possible for everybody to do it.”

DSPATIAL REALITY 2.0

Did you see Blade Runner 2049? If you did, then you’ve heard the results, whether you realize it or not, of the reverb engine at the core of DSpatial’s Reality 2.0 platform. Re-recording mixer Ron Bartlett and supervising sound editor Mark Mangini reportedly helped develop the reverb engine, which they used to create room ambiences in the film.

Rafael Duyos, co-founder and CEO of Barcelona, Spain-based DSpatial, is an old hand at immersive audio, having built a 24-speaker setup for his electronic music studio in 1997. A composer and producer, Duyos is also a whiz with electronics and a pioneering synthesizer manufacturer.

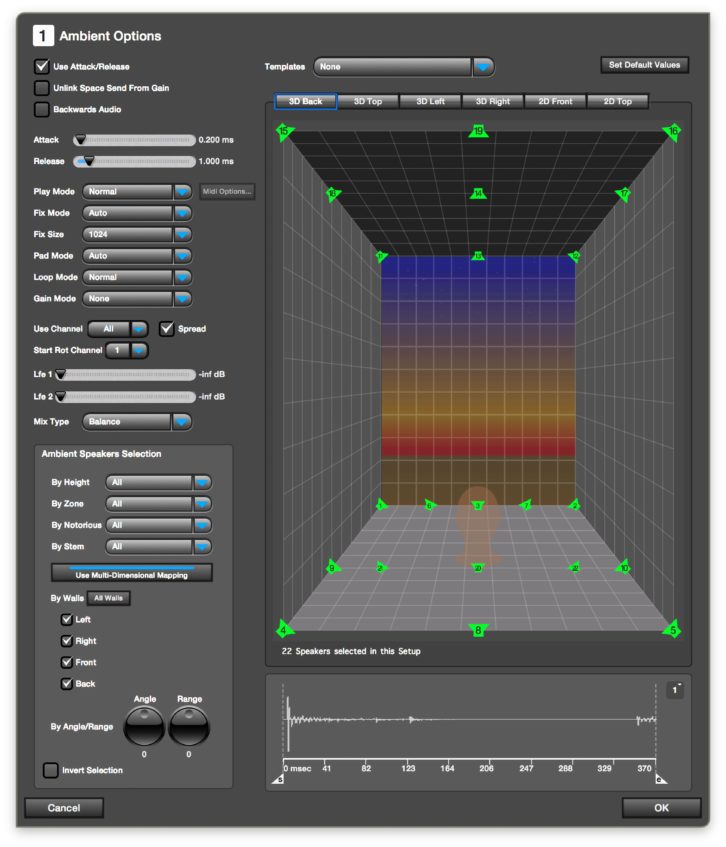

DSpatial’s software platform grew out of Duyos’ desire for realistic-sounding reverb and the ability to simulate any space, indoors or out. The simulated space is applied to every sound source for total realism. For example, if there are two sound sources, “they are in different places; the reflections are different,” Duyos says. “There is distance, so you need to emulate that and add some delay. At the same time, that makes a Doppler effect.”
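The link Duyos draws between distance, delay and Doppler shift comes down to simple arithmetic. The sketch below is a textbook illustration, not DSpatial's implementation. The function names, the 343 m/s speed of sound and the inverse-distance gain rule are all assumptions made for the example.

```python
# Textbook point-source arithmetic; all names here are hypothetical.
SPEED_OF_SOUND = 343.0  # m/s, assumed value for dry air at roughly 20 degrees C


def propagation_delay(distance_m: float) -> float:
    """Seconds for sound to travel distance_m from source to listener."""
    return distance_m / SPEED_OF_SOUND


def distance_gain(distance_m: float, ref_m: float = 1.0) -> float:
    """Inverse-distance level rolloff, clamped so gain never exceeds 1."""
    return ref_m / max(distance_m, ref_m)


def doppler_factor(radial_velocity_mps: float) -> float:
    """Pitch ratio heard by a stationary listener from a moving source.

    Positive velocity means the source is moving away (pitch drops);
    negative means it is approaching (pitch rises).
    """
    return SPEED_OF_SOUND / (SPEED_OF_SOUND + radial_velocity_mps)


if __name__ == "__main__":
    for d in (1.0, 10.0, 34.3):
        print(f"{d:5.1f} m: delay {propagation_delay(d) * 1000:6.1f} ms, "
              f"gain {distance_gain(d):.3f}")
    print(f"approaching at 34.3 m/s: pitch x{doppler_factor(-34.3):.3f}")
```

A source 34.3 meters away arrives about a tenth of a second late. If that source closes in at 34.3 m/s, the shrinking delay raises its pitch by roughly 11 percent, which is why modeling distance with a changing delay produces Doppler as a side effect.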

As development progressed, the reverb grew to become the engine at the platform’s core. The flagship Reality Builder version now offers 96 real-time reverb convolutions and 48 channels of modeled—not captured—impulse responses, plus a host of features too numerous to detail. One unique feature is a door simulation that re-creates the acoustic reflection, refraction, diffraction and scattering produced by walls, objects and doors.

The software sits on top of Pro Tools, Duyos says, and is fully integrated with that system’s automation. Reality works in layers, rather than tracks, he says, superimposing positioning and early reflections, reflections and reverberations, and speaker outputs. “We make a room designer; you decide where your speakers are,” Duyos says, allowing the user to model the monitoring environment. That enables a mix started in a room with, say, 24 speakers to be completed, via re-rendering, in another room with 12. One preset includes Japanese national broadcaster NHK’s 22.2 configuration.
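The idea of re-rendering one mix for different rooms can be sketched in a few lines. If a source is stored as a position rather than as speaker feeds, the gains can be recomputed for whatever layout is in the room. The example below uses plain pairwise constant-power panning on a horizontal ring. That is a deliberately simple stand-in, not DSpatial's algorithm, and the function name and the layouts are hypothetical.

```python
import math


def ring_gains(source_az_deg: float, speaker_az_deg: list[float]) -> dict[float, float]:
    """Constant-power pan between the two ring speakers adjacent to the source.

    Angles are azimuths in degrees on a horizontal ring. Assumes at least
    two speakers at distinct angles. Returns {speaker_azimuth: gain}.
    """
    speakers = sorted(a % 360 for a in speaker_az_deg)
    src = source_az_deg % 360
    gains = {a: 0.0 for a in speakers}
    n = len(speakers)
    for i in range(n):
        lo = speakers[i]
        hi = speakers[(i + 1) % n]      # wraps around the ring
        span = (hi - lo) % 360          # angular width of this speaker pair
        offset = (src - lo) % 360       # how far the source sits past `lo`
        if offset <= span:
            frac = offset / span        # 0 at `lo`, 1 at `hi`
            gains[lo] = math.cos(frac * math.pi / 2)
            gains[hi] = math.sin(frac * math.pi / 2)
            break
    return gains


if __name__ == "__main__":
    quad = [45, 135, 225, 315]                 # hypothetical 4-speaker room
    octo = [0, 45, 90, 135, 180, 225, 270, 315]  # hypothetical 8-speaker room
    # The same stored position (azimuth 90) rendered for each room.
    print(ring_gains(90, quad))
    print(ring_gains(90, octo))
```

In the four-speaker room the source at 90 degrees is shared equally between the speakers at 45 and 135 degrees. In the eight-speaker room it lands on the speaker at 90 degrees. The stored position is the same in both cases, and only the rendering step changes.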

The platform is format-agnostic. As the website explains, “Once created, the mix is played back in every format without the need to down-mix… Sound designers can start working in 5.1, stereo or even binaural, and their sessions can be played back in an Atmos stage while all spatial information is retained.”

TIMBER 3D SFERA

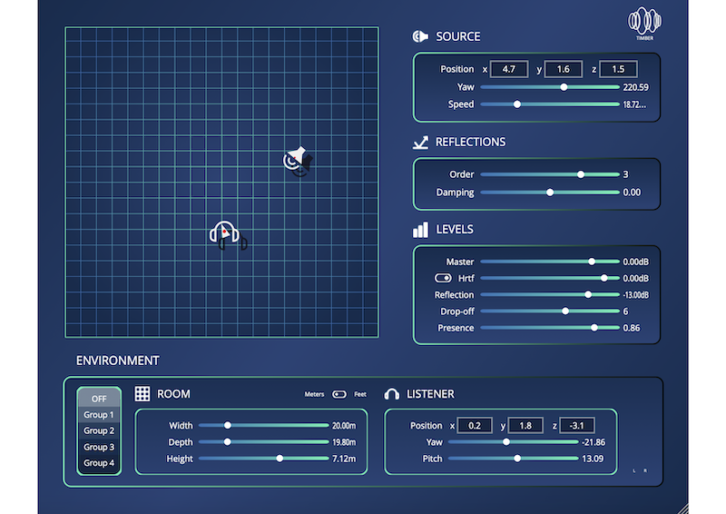

“The people at Netflix call us stereo-enhancers,” says Jordi Alba, who is in charge of international business development for Timber 3D, based in the Netherlands. The company’s Sfera plug-in combines binaural principles with proprietary spatial techniques developed by company founder Peter Bakker, co-creator of Audio Ease’s Altiverb, and CTO Daniel Talma, a 3D sound engineer, to realistically position or move sound sources around the listener.

“We’re replicating the way that humans perceive sound,” Alba says, adding that people working in surround formats most often rely on volume changes to create movement. “What we do is take a mono sound and give it cues to give the properties that give location,” including reflections, reverberation, level, HRTFs, phasing and so on. “What the Sfera engine does is calculate the position. We’re really strong on reflections; our convolution reverb is based on Peter’s experience. And we can deliver really high quality.”
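One of the location cues behind this kind of processing is the tiny arrival-time difference between the two ears. The sketch below estimates it with Woodworth's classic spherical-head approximation. It is a textbook formula, not Timber 3D's method, and the head radius, speed of sound and function name are assumptions for the example.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed
HEAD_RADIUS = 0.0875    # m, a commonly used average; assumed


def interaural_time_difference(azimuth_deg: float) -> float:
    """Woodworth estimate of the ear-to-ear arrival-time difference, in seconds.

    azimuth_deg is the source angle from straight ahead, valid for
    0 (front) through 90 (directly to one side).
    """
    theta = math.radians(azimuth_deg)
    return (HEAD_RADIUS / SPEED_OF_SOUND) * (theta + math.sin(theta))


if __name__ == "__main__":
    for az in (0, 30, 60, 90):
        itd_us = interaural_time_difference(az) * 1e6
        print(f"azimuth {az:2d} deg: ITD about {itd_us:4.0f} microseconds")
```

With these assumed values, a source directly to one side reaches the far ear roughly 650 microseconds after the near ear. A full headphone renderer layers level differences, HRTF filtering and reflections on top of a delay like this one.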

Sfera, available as an AAX, AU or VST plug-in for Mac only, first found a user base among VR practitioners, Alba says. “We said, VR is going to need sound that matches the image. Some people were mixing in stereo. Through our engine we could replicate a surround effect on headphones and on speakers.”

Now people working in television and online are using Sfera. “Most of the guys using our engine start in stereo then use the plug-in to enhance certain parts of the action,” Alba says. “There are certain things you can create in that mix that are really immersive. Why mix in surround when your listeners are online? And you don’t need a decoder.”

The reality these days is that you are mixing for an individual, Alba says. “Most people listen on headphones, and immersive tools like ours can enhance that.”