I have been a professional in game audio for nearly 25 years and have watched its tools evolve since the formative years of the now-ubiquitous Unreal engine. (I even helped design the Unreal 1.0 audio engine.) Because game technology changes so quickly, it has taken nearly that long for pro audio and game audio to forge a working relationship.

The relationship between gaming and pro audio has developed in proprietary ways since the late 1990s, with in-house teams rolling their own tools time and again, sometimes starting from scratch with each new project. Only in the last four years has that work moved into the commercial sector, with the introduction of Steinberg Nuendo’s Game Audio Connect, now in its second iteration.

For the first time, a game audio engine (specifically Audiokinetic’s Wwise) can interface directly with a pro audio DAW. Don’t get me wrong: this is a really big deal. But the need to move content from linear DAW formats such as music or film into truly nonlinear formats such as gameplay is growing even faster with the explosion of virtual reality and augmented reality.

Why has this taken so long, when games make so much money? For context, DAW developers tell me that it is difficult for them to pivot quickly in response to new game development environments. (Consider how long it took the film industry to move from Dolby Pro Logic to Dolby Digital.) Meanwhile, the core functionality and environment of most DAWs has stayed largely the same throughout this period: a tracking window and a mixing window.

Game development platforms shift every three to four years, with updates on both the hardware and software side that require significantly more tool support, from scripting to mix control; VR and AR are changing even more rapidly. Therein lies the problem. But there are fundamentals that don’t change, and those fundamentals of nonlinear mixing and audio design, even composition and voiceover, can work their way into DAWs.

About the Video: N-FOLD launched 6/28 as TheWaveVR’s first flagship show, in collaboration with Strangeloop and Flying Lotus’ acclaimed imprint BRAINFEEDER. N-FOLD is a synesthetic virtual reality experience that pushes the boundaries of music, visuals, and social interaction. Known for his iconic visual work for artists such as The Weeknd, Erykah Badu, and Flying Lotus, David Wexler (Strangeloop) brings his trademark psychedelic aesthetic to the realm of VR, taking the user on a cinematic journey that could only exist using VR technology.

VR and AR technologies are being used for more than just games. They are already being explored or implemented for episodic television, film, theme parks, military training and even new ways to experience music (see TheWaveVR, illustrated above). Once again there’s a disconnect between creating content and experiencing that content in context. In a DAW, it’s easy enough to sync to a film, but a game or VR/AR experience brings other factors into play. Real-time three-dimensional positioning has been a factor for years, and there is still a lot of debate about ambisonics vs. binaural or other recording methods for production. But once the content is in an editor, the environment itself (game or other entertainment) adds its own context and new factors to consider.

In a game, the player is (nearly) always facing the same direction as the game’s camera and perspective. Panning with Dolby and other technologies across whatever delivery system is in place, from headphones to 10.2 and beyond, can therefore position sounds correctly at whatever speed the camera turns. In VR or AR, however, you add the critical factor of head tracking. Players need high frame rates from the game or other interface for a variety of physiological reasons (low frame rates in a headset can cause discomfort and motion sickness), and the same applies to audio: spatial rendering has to keep up with every turn of the head.

Panning in a DAW therefore can’t fully replicate this tracking and rendering in the game engine. DAWs will need to let the sound designer, editor or mixer don a VR headset (HTC Vive, Sony PlayStation VR or Facebook’s Oculus) and audition and mix their assets as the player “pans” by turning their head (head tracking), as well as when objects move through the environment.
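To make the head-tracking point concrete, here is a minimal Python sketch, not taken from any DAW or engine, of why a static DAW pan cannot stand in for a tracked one: the same world-space source lands at a different head-relative angle, and therefore gets different channel gains, as the listener’s head yaw changes. The function names, the top-down 2D coordinate convention (x = right, z = forward, yaw measured clockwise from +z) and the simple constant-power stereo pan law are assumptions for illustration; a real renderer would use HRTFs or a multichannel panner instead.

```python
import math


def head_relative_azimuth(listener_xz, head_yaw_deg, source_xz):
    """Angle of a source relative to the direction the head faces, in degrees.

    Assumed (hypothetical) convention: top-down 2D view, x = right, z = forward,
    yaw measured clockwise from +z. Result lies in [-180, 180); positive = right.
    """
    dx = source_xz[0] - listener_xz[0]
    dz = source_xz[1] - listener_xz[1]
    world_azimuth = math.degrees(math.atan2(dx, dz))  # 0 = +z, 90 = +x
    relative = world_azimuth - head_yaw_deg
    return (relative + 180.0) % 360.0 - 180.0  # wrap into [-180, 180)


def constant_power_pan(azimuth_deg):
    """Map a head-relative azimuth to (left, right) gains with a constant-power law.

    sin() folds front and back together, a known limitation of plain stereo panning.
    """
    pan = math.sin(math.radians(azimuth_deg))  # -1 = hard left, +1 = hard right
    theta = (pan + 1.0) * math.pi / 4.0        # 0 .. pi/2
    return math.cos(theta), math.sin(theta)    # left^2 + right^2 == 1


if __name__ == "__main__":
    source = (0.0, 10.0)  # 10 units straight ahead of the origin
    for yaw in (0.0, 45.0, 90.0):  # the listener turns their head to the right
        az = head_relative_azimuth((0.0, 0.0), yaw, source)
        left, right = constant_power_pan(az)
        print(f"yaw {yaw:5.1f}  azimuth {az:6.1f}  L {left:.3f}  R {right:.3f}")
```

Turning the head 90 degrees to the right moves a source that started dead center to hard left, a change that a fixed pan automation lane in a DAW has no way to anticipate.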

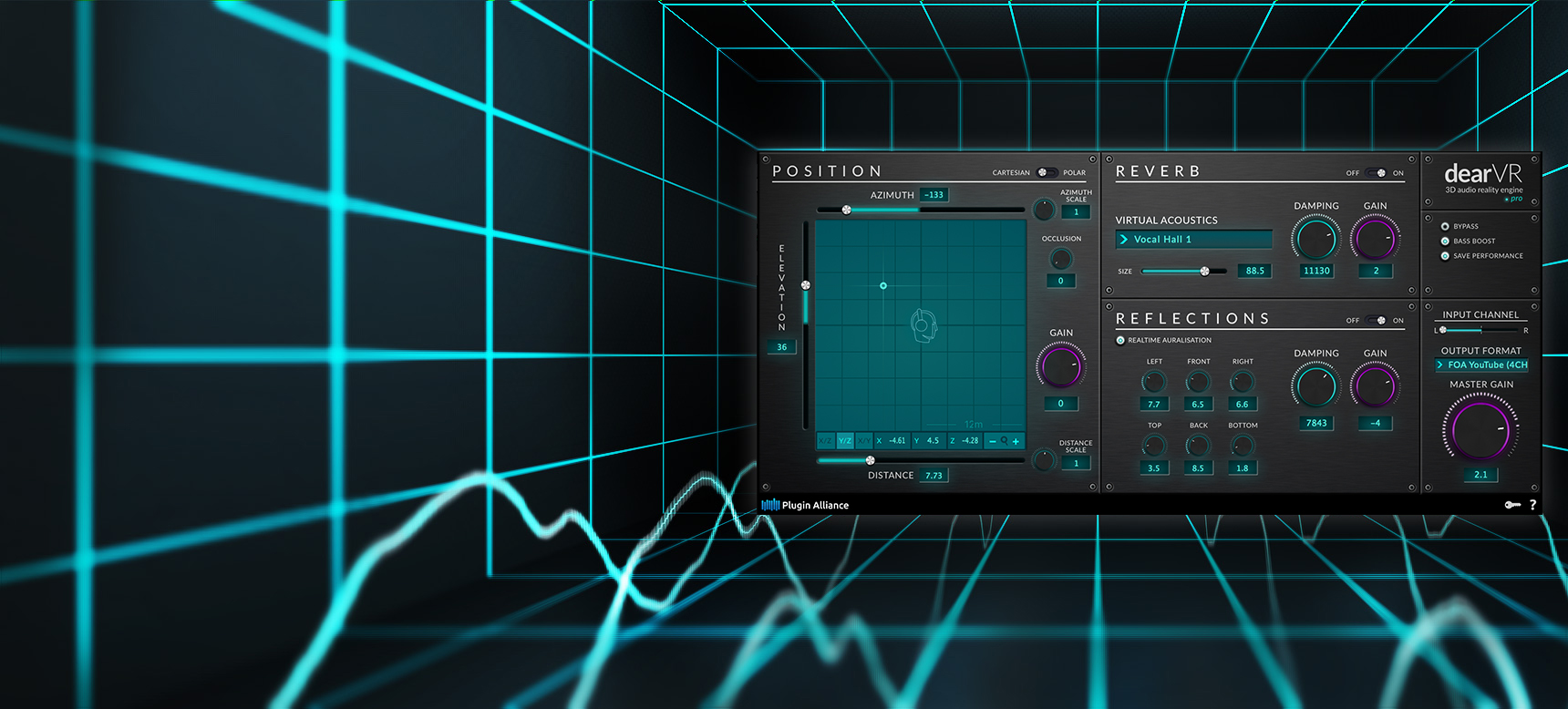

At least one tool has been developed to do just that: dearVR Spatial Connect. This plug-in provides an environment that displays mix data which can be manipulated with VR equipment. Both the headset and the controllers can be used to preview audio playback and adjust mix levels. That kind of in-context view is something that is still difficult to get in games.

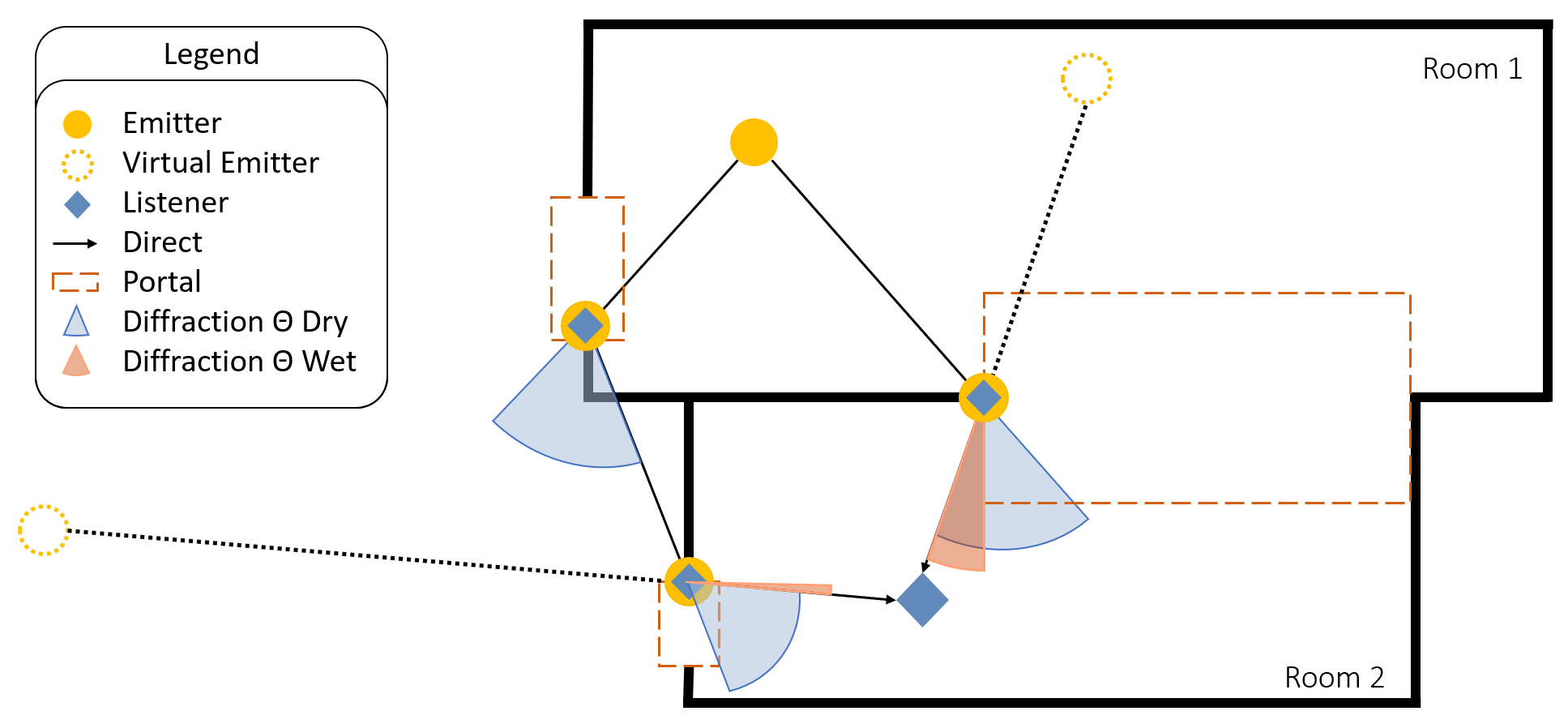

For example, Audiokinetic’s Wwise has long had a profiler that lists all playing sounds, along with every piece of information related to those sounds. But there is no easy way to see where these sounds are located in the game environment without significant additional work from the game team. The same is true across game audio middleware and engines: FMOD, Unreal and Unity typically don’t have an audio debug mode that displays easily discernible radii and property information near where the sounds themselves are playing.
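As a rough illustration of what such a debug mode would compute, the following Python sketch takes a list of playing sounds (the kind of data a profiler already exposes) and produces one label per sound with its distance from the listener, its radius and level, and whether the listener is inside that radius. The `PlayingSound` record, its fields, the meters unit and the label format are hypothetical and do not correspond to the Wwise, FMOD, Unreal or Unity APIs; a real tool would draw each label in the world at the emitter’s position rather than return strings.

```python
import math
from dataclasses import dataclass


@dataclass
class PlayingSound:
    # Hypothetical record; a real tool would fill this from its engine's profiler data.
    name: str
    x: float          # emitter position, top-down view (assumed meters)
    z: float
    radius: float     # attenuation radius: beyond this the sound is inaudible
    volume_db: float  # current playback level


def debug_overlay(sounds, listener_x, listener_z):
    """Return one text label per playing sound, nearest to the listener first."""
    rows = []
    for s in sounds:
        dist = math.hypot(s.x - listener_x, s.z - listener_z)
        status = "IN" if dist <= s.radius else "OUT"  # is the listener inside the radius?
        label = (f"{s.name}: {dist:.1f} m, radius {s.radius:.1f} m, "
                 f"{s.volume_db:+.1f} dB, {status}")
        rows.append((dist, label))
    rows.sort(key=lambda row: row[0])  # nearest sounds first
    return [label for _, label in rows]


if __name__ == "__main__":
    playing = [
        PlayingSound("waterfall", 30.0, 40.0, 40.0, -6.0),
        PlayingSound("footsteps", 3.0, 4.0, 10.0, 0.0),
    ]
    for line in debug_overlay(playing, 0.0, 0.0):
        print(line)
```

Sorting by distance puts the sounds most likely to matter to the player at the top, while the IN/OUT flag surfaces emitters that are playing (and consuming voices) without being heard.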

The path forward is clear. Linear production is here to stay: no matter what, sounds and music unfold over time, and building and playing them back in a tracking environment like today’s DAWs will always have a place in the production pipeline. But beyond tracking and mixing, we now need an environment mode: a way to organize the sounds, music and voice assets we create, and then hear them in context with appropriate data such as level, EQ and routing, and even a HUD that visually displays 360-degree panning information. This will be the mixing experience of the future. It’s not a nice-to-have; it’s a must-have.

We are on the edge of a huge leap forward in entertainment experience, audio pros. Let’s build tools that let us take that leap!